The significance of measuring performance in engineering teams cannot be overstated. The adage “What gets measured gets managed” captures why performance measurement matters so much within engineering teams. Assessing these teams’ efficiency, effectiveness, and progress with clear, precise metrics can make the difference between project success and failure.

Clear metrics play a crucial role in the success of engineering teams. They offer a quantifiable way to assess the team’s performance and provide valuable insights into their productivity and efficiency. By defining specific metrics, teams better understand their strengths and weaknesses, enabling them to optimize their workflow and deliver higher-quality outcomes.

Various metrics come into play in an engineering department, each serving a unique purpose. Some of these metrics are categorized as Software, Quality, Production, Infrastructure, Security, Product, and Business metrics.

It is not enough to have these metrics; tracking and monitoring them are equally crucial. Regularly analyzing these metrics enables teams to identify trends, detect potential issues early on, and make data-driven decisions promptly. Continuous monitoring empowers teams to take timely actions for improvement, fostering a culture of sustainable excellence and continuous improvement over time.

However, failing to have clear metrics and key performance indicators (KPIs) can lead to several negative consequences. These can range from the inability to make informed, data-driven decisions to a lack of focus caused by unclear objectives and metrics. Pinpointing the root cause of problems without specific parameters becomes difficult and often daunting. We can list them as follows:

- Impeding Data-Driven Decision-Making: Without measurable data, teams rely on guesswork rather than data-driven insights, leading to subjective decision-making.

- Creating Lack of Focus: Unclear goals and metrics contribute to a lack of focus among team members, hindering productivity and efficiency.

- Daunting Root Cause Analysis: The absence of clear metrics makes identifying the root causes of problems challenging, making issue resolution a daunting task.

- Inadequate Performance Management: Without well-defined metrics, performance management becomes subjective, potentially leading to unfair evaluations and demotivated team members.

- Hindering Continuous Improvement: The absence of clear metrics obstructs the measurement of progress, impeding the team’s ability to identify improvement opportunities and learn from their experiences.

By understanding the importance of clear metrics and the drawbacks of not having them, engineering teams can set themselves on the path to success. The next step is to explore effective strategies for establishing relevant metrics and ensuring continuous improvement.

Performance Management Frameworks

Objectives and Key Results (OKRs)

Objectives and Key Results (OKRs) offer a powerful and flexible approach to performance management. The framework is well-suited to organizations operating in dynamic environments. One of the key strengths of OKRs lies in their adaptability, allowing companies to respond swiftly to shifting circumstances.

The OKR system promotes alignment and engagement across the organization, empowering employees to take ownership of their roles and actively contribute to the achievement of overarching company goals.

Objectives:

Clear and specific objectives are the foundation of OKRs. Each objective should describe an outcome that can be objectively assessed. Objectives also need to be time-bound, with a specific deadline for their completion. This encourages a sense of urgency and keeps efforts focused on achieving the desired results within the specified timeframe.

Key Results:

Key results play an important role in the OKR. These are specific and measurable outcomes that show progress toward attaining an objective. When dealing with a challenging goal, breaking it down into measurable key results is essential. For instance, if the objective is to boost the sales performance of an e-commerce company by 30% in the next three months, key results may include metrics like new customer acquisition, existing customer retention, successful transactions, conversion rates, and more. Fundamentally, key results simplify larger objectives into specific, measurable results, making progress tracking and necessary modifications more manageable.

OKR Example: Improving the Performance of swtestacademy

Objective: Improve the performance of swtestacademy.

Key Results:

Increase website speed by 10% in the next quarter, measured on https://pagespeed.web.dev/

First Contentful Paint value less than 1.8 s.

Largest Contentful Paint value less than 1.9 s.

Cumulative Layout Shift less than 0.05.

Total Blocking Time of 0 ms.

Speed Index less than 1.8 s.

Reduce bounce rate by 10%.

Achieve an overall website score of 98+ on Google Page Speed.

Achieve an overall website score of 95+ (Grade A) on GTMetrix.

Achieve a performance grade of minimum B (80+) on Pingdom.
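Key results like these are easy to check automatically once the measurements are available. The following is a minimal Python sketch, not the author's tooling: the `KeyResult` class, the metric names, and the sample measurements are hypothetical, only a subset of the key results is modeled, and time-based thresholds are taken to be in seconds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyResult:
    """One measurable target (hypothetical helper for illustration)."""
    name: str
    target: float
    higher_is_better: bool = False  # the lab metrics here are "lower is better"

    def is_met(self, measured: float) -> bool:
        """Return True when the measured value satisfies the target."""
        if self.higher_is_better:
            return measured >= self.target
        return measured < self.target


# Targets copied from a subset of the key results above.
KEY_RESULTS = [
    KeyResult("first_contentful_paint_s", 1.8),
    KeyResult("largest_contentful_paint_s", 1.9),
    KeyResult("cumulative_layout_shift", 0.05),
    KeyResult("speed_index_s", 1.8),
    KeyResult("pagespeed_score", 98, higher_is_better=True),
]


def okr_progress(measurements: dict[str, float]) -> float:
    """Fraction of key results met, between 0.0 and 1.0."""
    met = sum(kr.is_met(measurements[kr.name]) for kr in KEY_RESULTS)
    return met / len(KEY_RESULTS)


# Made-up measurements, for illustration only.
sample = {
    "first_contentful_paint_s": 1.2,
    "largest_contentful_paint_s": 2.1,  # misses the 1.9 s target
    "cumulative_layout_shift": 0.02,
    "speed_index_s": 1.5,
    "pagespeed_score": 99,
}

print(f"{okr_progress(sample):.0%} of key results met")  # -> 80% of key results met
```

Reporting progress as a fraction of key results met is only one possible scoring choice; teams may prefer to grade each key result on a scale instead.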

Key Performance Indicators (KPIs)

Key Performance Indicators (KPIs) are invaluable tools for organizations prioritizing efficiency and optimization. These metrics are carefully crafted to be specific and measurable, allowing companies to track performance effectively and identify areas for enhancement. Organizations adopt KPIs to gain valuable insights into their health and performance, enabling informed decision-making based on crucial data.

Many KPIs are available, offering organizations various options to tailor them to their needs. KPIs can be created using different methodologies such as DORA (DevOps Research and Assessment), Quality, DevOps, and SPACE metrics, or by collaborating with other departments to align goals and objectives.

By implementing KPIs, organizations can foster a culture of continuous improvement, enabling them to stay competitive and achieve sustainable success. These performance indicators are instrumental in keeping teams focused on their objectives and driving overall progress.

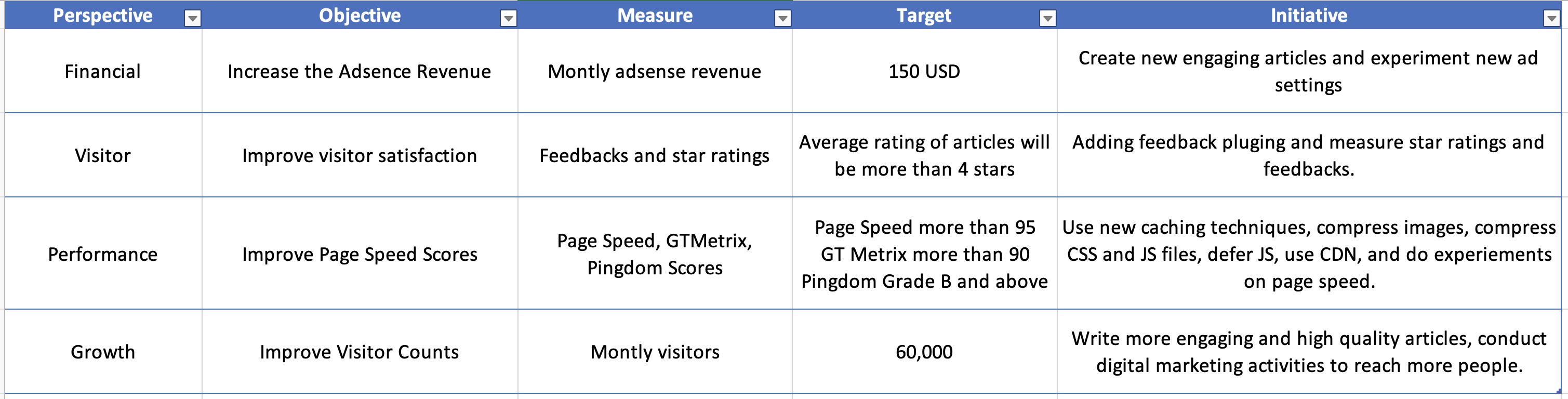

Balanced Scorecards

The Balanced Scorecard is an ideal tool for organizations that focus on balance and strategic alignment. It offers a holistic view of the organization’s health and performance by integrating financial and non-financial metrics. This comprehensive approach ensures that organizations consider a wide range of factors when evaluating their success.

By adopting the Balanced Scorecard, organizations gain a more balanced perspective on their performance. This strategic approach enables better decision-making and fosters a deeper understanding of the organization’s performance.

The Balanced Scorecard methodology facilitates the alignment of various departments and teams with the organization’s strategic goals, ensuring that efforts are synchronized and contribute to the organization’s vision. This emphasis on balance and alignment empowers organizations to thrive in dynamic and competitive environments.

Balanced Scorecard for SW Test Academy

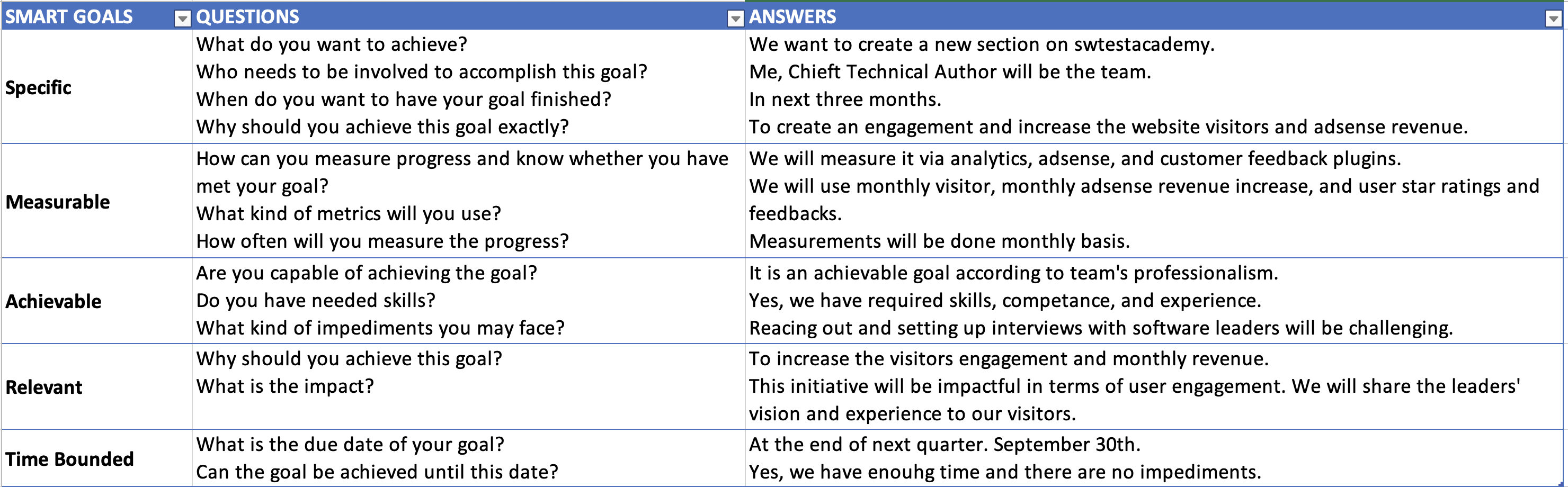

SMART Goals

SMART Goals are vital for organizations aiming for clear, precise objectives. They emphasize specificity and quantifiability, making them ideal for organizations that desire transparent and feasible targets. SMART Goals also offer a structured approach, promoting the division of larger goals into smaller, more digestible tasks.

Here’s a succinct breakdown of the SMART acronym:

- Specific: Instead of vaguely aiming to “boost AdSense revenue,” set a distinct goal like “increase the AdSense revenue by 15% within the forthcoming quarter.”

- Measurable: These goals should be quantifiable. Whether it’s through analytical tools or feedback forms for customer satisfaction, measurable metrics are crucial.

- Achievable: While goals should be challenging, they must also be realistic to prevent undue stress or stagnation.

- Relevant: Any goal set should correlate with the company’s overarching objectives, mission, and values. It ensures efforts aren’t scattered but focused on vital organizational aims.

- Time-Bound: Attaching a deadline to goals fosters urgency and accountability. Deadlines should be practical, offering enough time for completion without compromising on quality.

In essence, the SMART framework provides a blueprint for effective goal-setting. By adhering to its principles, organizations can streamline their objectives and set a clear path to success.

SMART Goals for SW Test Academy

Goal Description

Create a new section about interviewing software thought leaders that will increase monthly visitors and create positive engagement.

Other Metrics & KPIs in Software Engineering and overall IT

Measuring Software Quality

- Static Code Analysis:

Static Code Analysis involves evaluating the source code without running the program. It checks for code correctness, potential vulnerabilities, and code quality. Employing static code analysis tools early in the development process can save both time and money, preventing costly late-stage bug fixes.

Example: We can use SonarQube to analyze the codebase, discover software quality problems such as hard-coded passwords, and fix them before deployment so that they never become a security concern.

- Code Complexity:

This metric measures the intricacy of the code. Code with high complexity is tougher to maintain and more prone to bugs. Monitoring and managing complexity ensure our code remains maintainable.

Example: By using cyclomatic complexity tools, a development team can identify a module with soaring cyclomatic complexity and refactor it, simplifying both the code and future maintenance.
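To make the metric concrete, here is a rough Python sketch of how cyclomatic complexity can be approximated: start at 1 and add one for every decision point. It uses only the standard `ast` module; the function name and the sample code are hypothetical, and dedicated tools apply more detailed counting rules than this simplification.

```python
import ast

# Node types that each add one extra path through the code.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two short-circuit branches.
            complexity += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            # The comprehension loop itself plus each "if" filter.
            complexity += 1 + len(node.ifs)
    return complexity


# Hypothetical function to analyze.
SAMPLE = '''
def shipping_cost(weight, express, country):
    if weight <= 0:
        raise ValueError("weight must be positive")
    cost = 5.0
    if express and country == "US":
        cost += 10.0
    elif express:
        cost += 20.0
    for _ in range(int(weight)):
        cost += 1.5
    return cost
'''

# 1 (base) + 3 if/elif + 1 "and" + 1 for loop
print(cyclomatic_complexity(SAMPLE))  # -> 6
```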

- Technical Debt:

Technical debt represents the trade-off made when choosing a quicker yet less optimal solution. Monitoring it helps teams make informed decisions on when to refactor and when to push ahead.

Example: A team can deploy an app with known inefficiencies to meet a deadline. They track this as technical debt, planning a future sprint to address and optimize these areas.

- Code Smells:

These patterns in the code indicate potential problems. While they don’t cause program failure, they can point to deeper issues or future vulnerabilities.

Example: During a code review, a developer points out repeated code parts, suggesting a need for better object decomposition in line with the DRY (Don’t Repeat Yourself) principle.

- Code Coverage:

It indicates the percentage of the codebase tested by automated tests. High coverage doesn’t guarantee the absence of defects but helps in identifying untested paths.

Example: After integrating JaCoCo with their CI pipeline, a team identifies several critical paths in their application not covered by tests, leading them to write necessary test cases.

- Mutation Score:

A metric assessing the efficacy of tests by deliberately introducing bugs and seeing if tests catch them. It ensures the robustness of test suites.

Example: A team using PIT (Pitest) discovers their tests aren’t catching many of the introduced mutations, leading to an overhaul of their testing strategy.
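The idea can be illustrated without any tooling. In the hand-rolled Python sketch below, the function, the mutants, and both test suites are hypothetical; a real mutation testing tool generates and runs mutants automatically. Both suites execute every line of `is_adult`, yet only the stronger one kills all mutants.

```python
from typing import Callable


def is_adult(age: int) -> bool:
    """Original implementation under test."""
    return age >= 18


# Hand-written mutants; a real tool would generate these automatically.
MUTANTS: list[Callable[[int], bool]] = [
    lambda age: age > 18,   # boundary mutation: >= became >
    lambda age: age <= 18,  # operator flipped
    lambda age: True,       # return value replaced
]


def weak_suite(fn: Callable[[int], bool]) -> bool:
    """Passes if fn is correct for one obviously adult input."""
    return fn(30) is True


def strong_suite(fn: Callable[[int], bool]) -> bool:
    """Also checks the boundary value and a minor."""
    return fn(30) is True and fn(18) is True and fn(17) is False


def mutation_score(suite, mutants) -> float:
    """Share of mutants killed, i.e. mutants for which the suite fails."""
    killed = sum(1 for mutant in mutants if not suite(mutant))
    return killed / len(mutants)


print(f"weak suite:   {mutation_score(weak_suite, MUTANTS):.0%}")    # -> 33%
print(f"strong suite: {mutation_score(strong_suite, MUTANTS):.0%}")  # -> 100%
```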

- Test and Test Automation Results:

Automated tests produce outcomes indicating software health. Regularly inspecting these outcomes ensures software reliability and guides iterative development.

Example: An e-commerce platform runs Unit, Component, Contract, Integration, Visual, and UI (Selenium/Playwright/Cypress) test automation suites, ensuring that key user flows such as the checkout process, the UI, and integrations remain bug-free.

- Test Automation Coverage:

This metric shows what share of the test suite is automated rather than manual. Increasing automation accelerates feedback loops and eases regression testing. Ideally, we should automate as many tests as is practical.

Example: A company realizes that 30% of its regression tests are manual, leading to slower releases. They invest in automating these to shorten their release cycle time.

- Performance Testing Metrics:

Latency: Time taken to process a request. Low latency ensures responsive applications.

Throughput: Number of requests handled per unit of time, for example per second. High throughput is crucial for e-commerce companies that have millions of visitors.

Other performance test types, such as endurance, spike, stress, and memory leak tests, should also be conducted.

Example: A streaming service, after observing spikes in latency during peak hours using tools like JMeter, scales its infrastructure to handle more concurrent users, ensuring smooth streaming even during high demand.
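Latency is usually reported as percentiles rather than a single average, because a few slow requests can hide behind a good mean. The Python sketch below shows one way to derive percentiles and throughput from raw timings. The sample data and function names are hypothetical, and it uses the nearest-rank percentile method, which is only one of several definitions in use.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def throughput(request_count: int, window_seconds: float) -> float:
    """Completed requests per second over the measurement window."""
    return request_count / window_seconds


# Made-up response times in milliseconds from a 2-second test window.
latencies_ms = [120, 95, 110, 480, 105, 130, 98, 102, 115, 900]

print("p50:", percentile(latencies_ms, 50), "ms")  # -> p50: 110 ms
print("p90:", percentile(latencies_ms, 90), "ms")  # -> p90: 480 ms
print("throughput:", throughput(len(latencies_ms), 2.0), "req/s")  # -> 5.0 req/s
```

In this sample the median looks healthy while the 90th percentile is more than four times higher, which is the kind of peak-hour spike the example above describes.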

- Server Uptime:

It depicts the operational time without outages. High uptime means reliable services vital for businesses.

Example: A cloud service provider advertises 99.99% uptime, meaning its services should be unavailable for no more than about 52.6 minutes in a year.

- DB Availability:

Refers to the continuous accessibility of a database. Critical for operations relying on real-time data retrieval.

Example: After facing a database outage leading to booking failures, an online reservation system migrates to a more robust database solution with higher availability guarantees.

- Network Uptime:

Indicates the reliability of network connections. Pivotal for both internal and external services.

Example: An ISP promises 99.5% network uptime, assuring customers that their internet will be down for no more than about 43.8 hours in a year.
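The downtime figures in the server and network uptime examples follow directly from the availability percentage. As a small Python sketch (the function name is hypothetical, and an average year of 365.25 days is assumed):

```python
HOURS_PER_YEAR = 365.25 * 24  # average year, including leap years


def allowed_downtime_hours(availability_pct: float) -> float:
    """Maximum yearly downtime, in hours, for a given availability target."""
    return (1 - availability_pct / 100) * HOURS_PER_YEAR


for target in (99.5, 99.9, 99.99):
    hours = allowed_downtime_hours(target)
    print(f"{target}% -> {hours:.1f} h/year ({hours * 60:.1f} min)")
```

For 99.99% this gives roughly 52.6 minutes per year, and for 99.5% roughly 43.8 hours per year.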

- Security/Vulnerability Results:

The outcome of security scans reveals potential weak spots. Regularly addressing vulnerabilities ensures robust defenses.

Example: After a routine vulnerability scan using tools like Nessus and ZAP, a company discovers potential exploits in its online portals and promptly addresses them, averting potential breaches.

- Defect Metrics:

Comprehensive metrics provide insight into software quality, guiding improvements.

Example: A mobile game studio reviews defect metrics, notices a spike in crash reports after a particular release, and swiftly rolls out a fix.

- Change Failure Rate (DORA):

Represents the percentage of deployments that result in a failure in production. Low rates suggest efficient testing and deployment practices.

Example: An online retailer tracks a change failure rate of only 2%, indicating that 98% of their deployments occur without incidents, boosting stakeholder confidence.

Measuring Delivery Performance

- Burn Down Charts:

A visual representation of work left to do versus time. It’s a dynamic chart that shows whether a team is on track to complete tasks within a set timeframe, like in a sprint. A steadily descending chart indicates a team on track, while any spikes could suggest obstacles.

Example: Halfway through a sprint, a software development team consults their burn-down chart and notices a significant deviation from the predicted line, hinting at potential delays or underestimations.
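The "predicted line" in a burn-down chart is typically a straight line from the total committed work down to zero. The Python sketch below compares actual remaining work against that ideal line. The function names and sprint numbers are made up for illustration, and a linear ideal line is an assumption.

```python
def ideal_remaining(total_points: float, sprint_days: int, day: int) -> float:
    """Points that should remain after `day` days on a straight-line burn-down."""
    return total_points * (1 - day / sprint_days)


def deviation(actual_remaining: list[float], total_points: float,
              sprint_days: int) -> list[float]:
    """Actual minus ideal remaining work per day (positive means behind plan)."""
    return [
        actual - ideal_remaining(total_points, sprint_days, day)
        for day, actual in enumerate(actual_remaining)
    ]


# Hypothetical 10-day sprint with 40 points; readings for days 0 through 5.
actual = [40, 38, 36, 35, 33, 30]

gaps = deviation(actual, total_points=40, sprint_days=10)
print(f"Day 5: {gaps[-1]:.0f} points behind the ideal line")  # -> 10 points behind
```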

- Team Velocity:

This metric indicates the amount of work a team completes during a sprint. Predictability and consistent delivery are established by measuring team velocity over several sprints.

Example: After three sprints, a team notices their velocity stabilizing around 35 story points per sprint, helping them make better future commitments.

- Committed vs. Delivered Stories:

This metric contrasts the work a team committed to versus what was actually completed. It assists in understanding team estimation accuracy and sets realistic expectations.

Example: At the end of a sprint, a team reviews that they committed to 10 stories but completed only 8, prompting a discussion on improving estimations or addressing bottlenecks.
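Both velocity and committed-versus-delivered are simple to compute from sprint records. The following Python sketch uses hypothetical function names and made-up sprint data, and averages velocity over the three most recent sprints, which is a common but not universal choice.

```python
from statistics import mean


def average_velocity(points_per_sprint: list[int], window: int = 3) -> float:
    """Mean story points completed over the most recent `window` sprints."""
    return mean(points_per_sprint[-window:])


def delivery_ratio(committed: int, delivered: int) -> float:
    """Share of committed stories that were actually delivered."""
    return delivered / committed


# Made-up completed story points for four consecutive sprints.
velocities = [28, 34, 36, 35]

print(average_velocity(velocities))              # -> 35
print(f"{delivery_ratio(10, 8):.0%} delivered")  # -> 80% delivered
```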

- Time to Market:

Represents the duration from idea conception to its availability to customers. Reducing time to market provides a competitive edge and quicker feedback loops. Faster time to market means testing, developing, and releasing the software artifacts to the production environment as quickly as possible to gain a competitive advantage and provide new features to the customers. Faster time to market can be achieved by implementing a shift-left approach and early testing.

As its name suggests, shift-left means moving development and testing activities to the early stages of the SDLC. For example, tests, styling checks, dependency checks, static code analysis, security and vulnerability scans, performance tests, and similar activities can run right after new code is committed.

Early testing refers to testing the software as early as possible. It is also one of the principles of software testing. By testing the software early, we can identify and fix defects before proceeding to the later stages. This way, we reduce delays in releasing new software features to the production environment.

Example: A startup eager to launch its new app focuses on reducing time-to-market. Through effective planning and execution, they introduce their app in just four months, gaining a head start over competitors.

- Lead Time:

The span from the customer’s request to its fulfillment. A shorter lead time enhances customer satisfaction by delivering solutions promptly.

Example: An e-commerce platform, by optimizing its supply chain and logistics, cuts down the lead time from order placement to delivery, resulting in positive customer feedback.

- Cycle Time:

This metric covers the period from when a task enters the ‘in-progress’ phase to its completion. Tracking cycle time helps identify process inefficiencies and potential areas of improvement.

Example: A company, upon reviewing its cycle time, realizes that feedback loops are causing delays, leading to a streamlined feedback process.
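Lead time and cycle time differ only in where the clock starts, which is easy to see in code. In this Python sketch the `WorkItem` class, its field names, and the timestamps are hypothetical. Real issue trackers expose similar timestamps under their own names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class WorkItem:
    requested_at: datetime  # request logged
    started_at: datetime    # moved to "in progress"
    completed_at: datetime  # delivered

    @property
    def lead_time(self) -> timedelta:
        """Request to fulfillment."""
        return self.completed_at - self.requested_at

    @property
    def cycle_time(self) -> timedelta:
        """Start of work to completion."""
        return self.completed_at - self.started_at

    @property
    def wait_time(self) -> timedelta:
        """Time spent queued before anyone started working."""
        return self.started_at - self.requested_at


# Made-up timestamps for a single work item.
item = WorkItem(
    requested_at=datetime(2024, 3, 1, 9, 0),
    started_at=datetime(2024, 3, 4, 9, 0),
    completed_at=datetime(2024, 3, 6, 17, 0),
)

print("lead time: ", item.lead_time)   # -> 5 days, 8:00:00
print("cycle time:", item.cycle_time)  # -> 2 days, 8:00:00
print("waiting:   ", item.wait_time)   # -> 3 days, 0:00:00
```

Separating out the waiting time shows whether delays come from the work itself or from items sitting in a queue.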

- Lead Time for Changes (DORA):

Represents the time taken to go from code commit to code successfully running in production. Optimizing this metric ensures quicker feature releases and bug fixes.

Example: An online platform, after integrating CI/CD pipelines, reduces its lead time for changes from weeks to mere days, allowing faster response to market needs.

- Deployment Frequency (DORA):

Indicates how often deployments occur. High deployment frequency generally signifies a mature CI/CD process and agile methodologies in action.

Example: A fintech firm moves from monthly to daily deployments, showcasing its robust development practices and ability to adapt to market demands quickly.

- Mean Time to Resolve (DORA):

The average time taken to resolve a defect or restore service after an outage (DORA refers to this as time to restore service). A shorter MTTR ensures business continuity and preserves customer trust.

Example: An online company, after a major outage, reviews its MTTR and invests in dedicated rapid response teams, significantly cutting down its resolution times.
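The four DORA-style metrics mentioned in this article (deployment frequency, lead time for changes, change failure rate, and time to restore) can all be derived from the same deployment log. The Python sketch below is a simplified model, not an official DORA calculation: the `Deployment` record, its fields, and the sample data are hypothetical, a deployment counts as failed when it has a `restored_at` timestamp, and lead time is reported as a median.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    restored_at: Optional[datetime] = None  # set only if the deployment failed


def deployment_frequency(deploys: list[Deployment], period_days: int) -> float:
    """Average number of deployments per day over the period."""
    return len(deploys) / period_days


def lead_time_for_changes(deploys: list[Deployment]) -> timedelta:
    """Median time from code commit to running in production."""
    return median([d.deployed_at - d.committed_at for d in deploys])


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that caused a failure in production."""
    return sum(d.restored_at is not None for d in deploys) / len(deploys)


def mean_time_to_restore(deploys: list[Deployment]) -> timedelta:
    """Average time from a failed deployment to restored service."""
    durations = [d.restored_at - d.deployed_at
                 for d in deploys if d.restored_at is not None]
    if not durations:
        return timedelta(0)
    return sum(durations, timedelta()) / len(durations)


# Made-up deployment log covering five days.
base = datetime(2024, 5, 1, 9, 0)
h, d = timedelta(hours=1), timedelta(days=1)
DEPLOYS = [
    Deployment(base, base + 2 * h),
    Deployment(base + d, base + d + 4 * h),
    Deployment(base + 2 * d, base + 2 * d + 3 * h,
               restored_at=base + 2 * d + 3 * h + timedelta(minutes=45)),
    Deployment(base + 3 * d, base + 3 * d + 1 * h),
    Deployment(base + 4 * d, base + 4 * d + 6 * h),
]

print(deployment_frequency(DEPLOYS, period_days=5))  # -> 1.0
print(lead_time_for_changes(DEPLOYS))                # -> 3:00:00
print(f"{change_failure_rate(DEPLOYS):.0%}")         # -> 20%
print(mean_time_to_restore(DEPLOYS))                 # -> 0:45:00
```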

Measuring Collaboration

- Knowledge Sharing:

Sharing knowledge strengthens team cohesion, accelerates learning, and promotes innovative thinking. It’s a vital component in fostering a culture of continuous improvement.

- Attending and Talking at Conferences and Webinars:

- Active participation in conferences as attendees or speakers enhances exposure to new ideas and provides platforms for showcasing organizational expertise.

- Example: Developers from a tech company attend a leading AI conference. Upon return, they share cutting-edge techniques and methodologies they’ve learned, improving the company’s AI capabilities.

- Blog posts:

- Writing and sharing insights through blogs help establish thought leadership and provide valuable insights to the community.

- Example: Onur Baskirt (me) writes blogs about recent advancements in software engineering and leadership to share his experience and thoughts with the community.

- Internal Training:

- Conducting or attending internal training sessions accelerates the transfer of expertise within an organization, ensuring all team members are equipped with the necessary skills and knowledge.

- Example: A cybersecurity firm conducts bi-monthly internal training on emerging threats and mitigation techniques, ensuring that every team member is always updated and ready to handle new challenges.

- Pair Programming:

This agile software development technique involves two programmers working together at one workstation, either at the same desk or in an online session using desktop sharing. One writes the code while the other reviews each line. It enhances code quality, fosters learning, and promotes a collaborative culture.

Example: A company adopts pair programming and, within a few months, notices a significant reduction in bugs and an improvement in feature delivery speed, thanks to the combined expertise and immediate code reviews.

- Open Source Contributions:

Contributing to open-source projects showcases an organization’s commitment to community development. It also sharpens team skills, as they’re exposed to diverse coding practices and feedback from the global developer community.

Example: Engineers from a cloud service provider actively contribute to the Kubernetes project. This enhances their internal Kubernetes expertise and positions the company as a key player in the cloud orchestration community.

Measuring Product Performance

- Customer Satisfaction/Reviews:

Gauging customer satisfaction provides insights into product value and areas needing improvement. Reviews, both positive and negative, act as feedback mechanisms and reflect the product’s market standing.

Example: An e-commerce platform can regularly collect reviews after purchases. After analyzing feedback, they can introduce a faster checkout process, leading to higher customer satisfaction scores in subsequent reviews.

- Conversion Rate:

This metric illustrates the percentage of users who take a desired action, such as making a purchase or signing up. A high conversion rate often indicates effective marketing and product value proposition.

Example: A SaaS company can tweak its landing page design and messaging. Over the next month, they can observe a significant increase in their conversion rate.

- Retention Rate:

It measures the percentage of customers who continue using a product over time. A high retention rate indicates product stickiness and customer loyalty, while a declining rate may signal issues requiring attention.

Example: An online gaming platform, after introducing new features, can see its three-month retention rate climb, suggesting the new additions enhanced user experience and value.

- User Engagement:

This evaluates how actively users interact with a product. Metrics can include session duration, frequency of use, or feature interactions. High engagement often correlates with product value and user satisfaction.

Example: A fitness tracking app can post a new update and see users logging their workouts more frequently and exploring more in-app features. This spike in user engagement indicates the update’s success in providing more value to users.

Regularly measuring product performance through these metrics enables businesses to continually refine their offerings, ensuring alignment with user needs and expectations, ultimately driving success in competitive markets.
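Conversion rate and retention rate are both simple ratios. A brief Python sketch follows, with hypothetical function names and made-up numbers. Retention is computed here on a fixed starting cohort, which is one common definition among several.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Percentage of visitors who took the desired action."""
    return 100 * conversions / visitors


def retention_rate(start_cohort: set[str], active_later: set[str]) -> float:
    """Percentage of the starting cohort still active at the end of the period."""
    retained = start_cohort & active_later
    return 100 * len(retained) / len(start_cohort)


# Made-up numbers: 45 sign-ups from 1,500 landing page visitors.
print(f"conversion: {conversion_rate(45, 1500):.1f}%")  # -> conversion: 3.0%

# Made-up user IDs: new users acquired after the cohort started are ignored.
january_users = {"ann", "bob", "cem", "dee", "eli"}
april_active = {"ann", "cem", "dee", "zoe"}
print(f"retention: {retention_rate(january_users, april_active):.0f}%")  # -> retention: 60%
```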

Measuring Operational Performance

- Employee Retention Rate:

A crucial metric that determines the percentage of employees who stay with the company over a specified period. A high retention rate suggests a positive work environment, competitive compensation, and effective management.

Example: If a company finds its employee retention rate dropping, it could introduce better benefits, flexible work arrangements, or tailored training programs to reverse the trend and ensure they retain top talent.

- Employee Satisfaction:

This metric measures the contentment and morale of employees within an organization. Regular surveys, interviews, and feedback mechanisms are tools to measure this. High employee satisfaction often translates to increased productivity and better company culture.

Example: A company, after a dip in employee satisfaction scores, can initiate town hall meetings and open forums to address concerns and gather suggestions, fostering an environment where employees feel heard and valued.

- 360-Degree Feedback:

A comprehensive review system where employees receive feedback from their peers, subordinates, and supervisors. It provides a holistic view of an employee’s performance, strengths, and areas of improvement.

Example: By integrating 360 feedback into its annual review process, a company can better understand team dynamics and individual contributions, guiding its training and development initiatives more effectively.

Measuring operational performance through these metrics helps companies enhance their internal processes and culture and sets them up for long-term success by ensuring a motivated and satisfied workforce.

Conclusion

In the realm of IT and software engineering, there are countless other metrics that can be instrumental in measuring performance. It’s paramount to remember that while these metrics provide a foundational understanding, it’s essential to fine-tune them according to your company’s unique culture, conditions, and specific objectives. By customizing these metrics to fit your organizational landscape, you can ensure a more accurate, relevant, and actionable understanding of performance, setting the stage for continual growth and improvement.

Thanks,

Onur Baskirt

Onur Baskirt is a Software Engineering Leader with international experience in world-class companies. Now, he is a Software Engineering Lead at Emirates Airlines in Dubai.