In this article, I describe how to integrate Tesults with pytest for test reporting, using a pytest conftest.py file. I assume you already have testing set up with pytest. The official pytest documentation is located here. Let’s go through the Tesults and pytest integration step by step.

Step 1 – Sign Up

Tesults is a web-based (cloud-hosted) app, which means that, unlike Allure or ExtentReports, there is no infrastructure or software to set up, but it does require signing up. Go to Tesults and sign up.

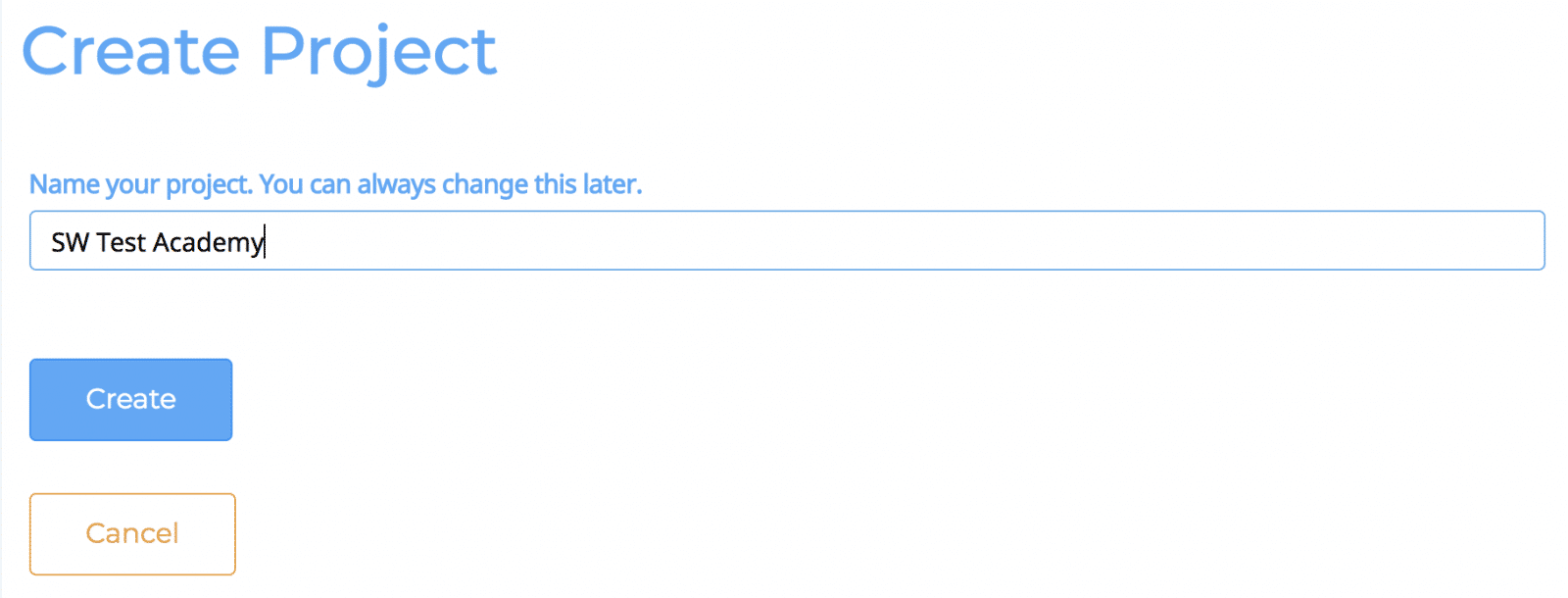

Step 2 – Create a Project

After signing up, log in and click the settings (middle) icon in the top-right menu bar.

This opens up the settings/configuration. You need to create at least one project to start reporting results. Click create a project and name the project.

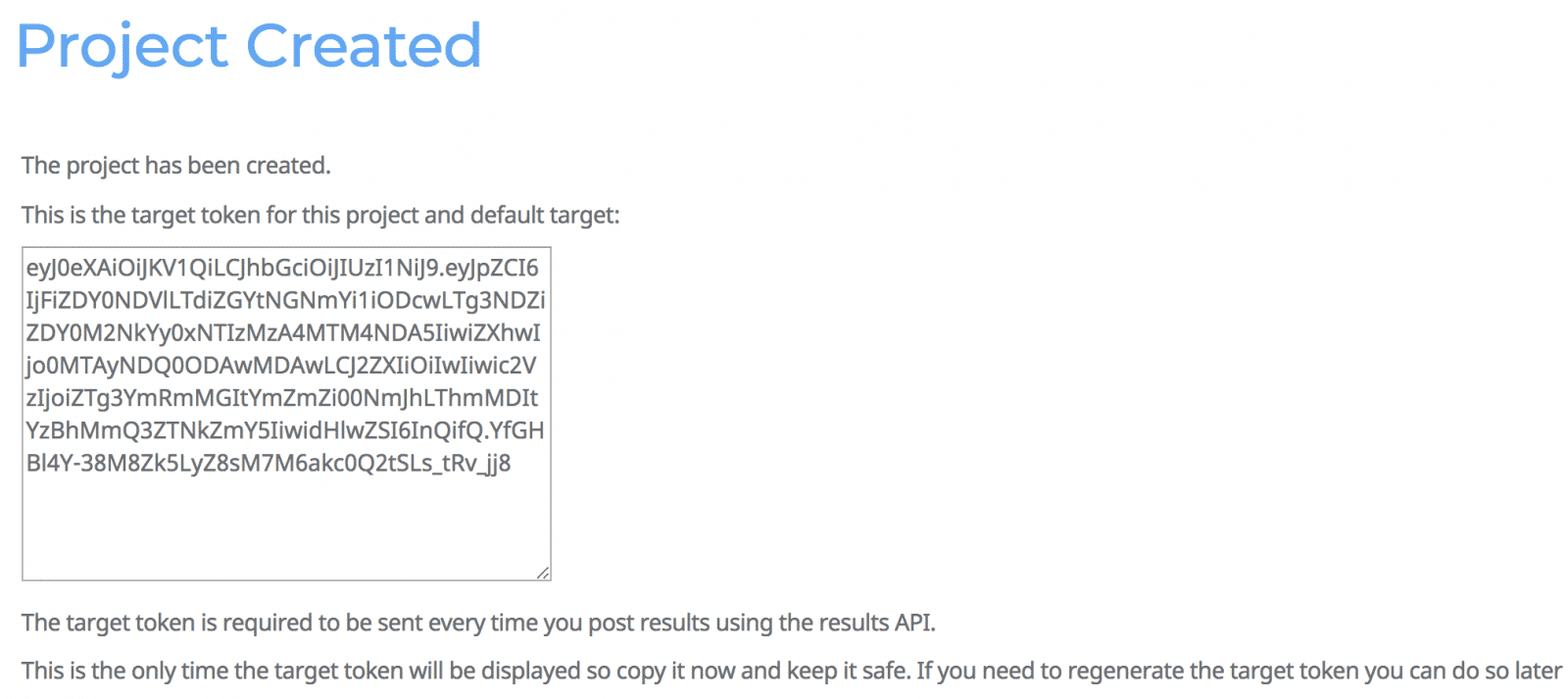

Finish creating the project by selecting a free or paid plan. You are then given a token.

You must copy this token because it is needed later in the code to upload results from pytest.
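One way to keep the token out of source control is to read it from an environment variable rather than pasting it into a file. The sketch below assumes a hypothetical variable name, `TESULTS_TOKEN`, and a hypothetical helper, `load_token`; neither is part of Tesults.

```python
import os


def load_token(env_var="TESULTS_TOKEN"):
    """Return the Tesults token stored in an environment variable.

    The variable name is an assumption made for this sketch, not a
    Tesults convention.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set {env_var} to the token copied from the Tesults project settings"
        )
    return token
```

The returned value can then be used wherever the upload code expects the token.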

Step 3 – Add a conftest.py file to the pytest test directory

Tesults provides documentation for reporting results with Python here and for pytest specifically here.

I will outline the process here. Install the Tesults Python API library using pip:

pip install tesults

Next, create a new Python file called conftest.py. This is considered a ‘local plug-in’ by pytest. For more detailed information about conftest.py, see this link.

The conftest.py can contain ‘hook’ functions that pytest runs automatically as your tests run (the file must be named conftest.py for pytest to recognize it). To record results and upload them to Tesults we need to implement two hook functions, pytest_runtest_protocol and pytest_unconfigure, both documented here.
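To make the first hook more concrete: pytest's `runtestprotocol` helper returns one report per phase of a test (setup, call, teardown), and only the `call` phase reflects the test body itself. The sketch below isolates that selection step. It uses `SimpleNamespace` objects as stand-ins for real pytest reports, and `call_phase_outcomes` is a hypothetical helper name, so it runs without pytest installed.

```python
from types import SimpleNamespace


def call_phase_outcomes(reports):
    """Return the outcome of each 'call' phase report, skipping setup and teardown."""
    return [report.outcome for report in reports if report.when == "call"]


# Stand-ins for the three reports pytest produces for one failing test.
fake_reports = [
    SimpleNamespace(when="setup", outcome="passed"),
    SimpleNamespace(when="call", outcome="failed"),
    SimpleNamespace(when="teardown", outcome="passed"),
]

print(call_phase_outcomes(fake_reports))  # ['failed']
```

One consequence of filtering this way is that a test which is skipped, or which errors during setup, yields no `call` report, so nothing is recorded for it.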

Rather than writing custom code, you can copy and paste the conftest.py from Tesults. Replace the placeholder value ‘token’, assigned to the ‘target’ key in the data dictionary near the top of the file, with the token you were given when creating your project. You should not have to edit anything else.

You can place the conftest.py in a specific test directory or, if you want to upload results from multiple test directories, in a higher-level directory that contains them.

    import tesults
    import sys
    import os
    from _pytest.runner import runtestprotocol

    # The data variable holds test results and Tesults target information; at the end of the test run it is uploaded to Tesults for reporting.
    data = {
        'target': 'token',
        'results': { 'cases': [] }
    }

    # Converts a pytest test outcome to a Tesults-friendly result (for example pytest uses 'passed', Tesults uses 'pass')
    def tesultsFriendlyResult (outcome):
        if outcome == 'passed':
            return 'pass'
        elif outcome == 'failed':
            return 'fail'
        else:
            return 'unknown'

    # Extracts the test failure reason
    def reasonForFailure (report):
        if report.outcome == 'passed':
            return ''
        else:
            return report.longreprtext

    # Maps parametrize argument names to the values encoded in the test id, e.g. test_eval[3+5-8]
    def paramsForTest (item):
        # get_closest_marker replaces get_marker, which was removed in pytest 4
        paramKeys = item.get_closest_marker('parametrize')
        if paramKeys:
            paramKeys = paramKeys.args[0]
            if isinstance(paramKeys, str):
                # Argument names may also be given as one comma-separated string
                paramKeys = [key.strip() for key in paramKeys.split(',')]
            params = {}
            values = item.name.split('[')
            if len(values) > 1:
                values = values[1]
                values = values[:-1] # removes ']'
                values = values.split("-") # values now separated
            else:
                return None
            for key in paramKeys:
                if len(values) > 0:
                    params[key] = values.pop(0)
            return params
        else:
            return None

    # Collects files generated by a test (logs, screen captures) from a directory named after the test
    def filesForTest (item):
        files = []
        path = os.path.join(os.path.dirname(os.path.realpath(__file__)), "path-to-test-generated-files", item.name)
        if os.path.isdir(path):
            for dirpath, dirnames, filenames in os.walk(path):
                for file in filenames:
                    # Join with dirpath so files in subdirectories get the correct path
                    files.append(os.path.join(dirpath, file))
        return files

    # A pytest hook, called by pytest automatically - used to extract test case data and append it to the data global variable defined above.
    def pytest_runtest_protocol(item, nextitem):
        global data
        reports = runtestprotocol(item, nextitem=nextitem)
        for report in reports:
            if report.when == 'call':
                testcase = {
                    'name': item.name,
                    'result': tesultsFriendlyResult(report.outcome),
                    'suite': str(item.parent),
                    'desc': item.name,
                    'reason': reasonForFailure(report)
                }
                files = filesForTest(item)
                if len(files) > 0:
                    testcase['files'] = files
                params = paramsForTest(item)
                if params:
                    testcase['params'] = params
                    testname = item.name.split('[')
                    if len(testname) > 1:
                        testcase['name'] = testname[0]
                paramDesc = item.get_closest_marker('description')
                if paramDesc:
                    testcase['desc'] = paramDesc.args[0]
                data['results']['cases'].append(testcase)
        return True

    # A pytest hook, called by pytest automatically - used to upload test results to Tesults.
    def pytest_unconfigure (config):
        global data
        print('-----Tesults output-----')
        if len(data['results']['cases']) > 0:
            print('data: ' + str(data))
            ret = tesults.results(data)
            print('success: ' + str(ret['success']))
            print('message: ' + str(ret['message']))
            print('warnings: ' + str(ret['warnings']))
            print('errors: ' + str(ret['errors']))
        else:
            print('No test results.')

I mentioned that the conftest.py only needs to implement two functions and this does that, but there are also additional helper functions in the code above that take care of automatically reporting complete test details.
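For orientation, this is roughly the shape of the data the hooks build up before upload, shown for a single failing parameterized test. The keys come from the conftest.py above; `build_case` is a hypothetical helper, and the suite string, failure reason and parameter values are placeholders invented for illustration.

```python
def build_case(name, result, suite, desc=None, reason="", params=None, files=None):
    """Assemble one test case dict using the same keys as the conftest.py above."""
    case = {
        "name": name,
        "result": result,  # 'pass', 'fail' or 'unknown'
        "suite": suite,
        "desc": desc or name,  # falls back to the test name, as in conftest.py
        "reason": reason,
    }
    # Optional keys are only added when there is something to report.
    if params:
        case["params"] = params
    if files:
        case["files"] = files
    return case


data = {
    "target": "token",  # placeholder, replace with the project token
    "results": {"cases": []},
}

data["results"]["cases"].append(
    build_case(
        name="test_eval",
        result="fail",
        suite="<Module test_sample.py>",  # illustrative value
        reason="assert 54 == 42",
        params={"test_input": "6*9", "expected": "42"},
    )
)
```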

This mostly works automatically. The only thing to consider is that if you want a test description to be uploaded, you need to add this line above each of your test functions:

@pytest.mark.description("your test description")
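Recent pytest versions emit a warning for marks that have not been registered, and `description` is a custom mark. A minimal sketch of registering it from conftest.py with the `pytest_configure` hook follows; the wording of the marker help text is my own.

```python
def pytest_configure(config):
    """Register the custom 'description' mark so pytest does not warn about it."""
    config.addinivalue_line(
        "markers",
        "description(text): human-readable description reported to Tesults",
    )
```

Each test file that uses the decorator also needs `import pytest` at the top.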

Also for parameterized test cases, add something similar to:

@pytest.mark.parametrize(("test_input", "expected"), [("3+5", 8), ("2+4", 6), ("6*9", 42)])
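pytest appends the parameter values to the test name as an id, for example `test_eval[3+5-8]`, and the `paramsForTest` helper in conftest.py recovers the values by splitting that id on `-`. The sketch below reproduces that logic as a standalone function so the behaviour is easy to see; `params_from_test_id` is a name chosen here and `test_eval` is a hypothetical test name.

```python
def params_from_test_id(test_name, keys):
    """Map parameter names to the values encoded in a pytest test id."""
    parts = test_name.split("[")
    if len(parts) < 2:
        return None  # not a parameterized test
    values = parts[1][:-1].split("-")  # drop the trailing ']' and split the id
    return dict(zip(keys, values))


print(params_from_test_id("test_eval[6*9-42]", ("test_input", "expected")))
# {'test_input': '6*9', 'expected': '42'}
```

Note that all values come back as strings, and a parameter value that itself contains `-` (a negative number, say) would be split in the wrong place.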

That completes the integration. Now run the tests.

Step 4 – Run the tests and generate the report on Tesults

Run the tests the same way you always do in pytest:

python -m pytest

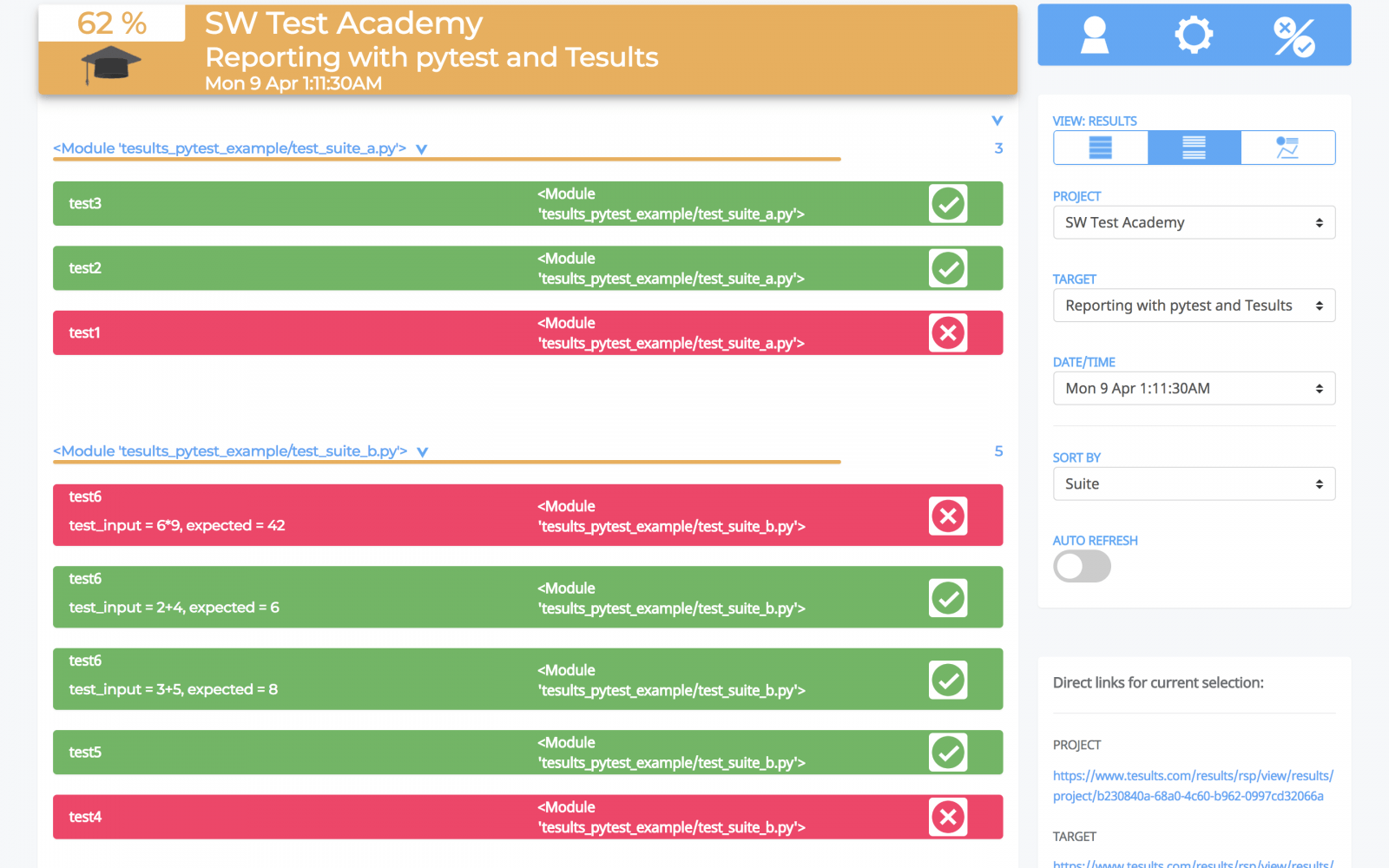

As can be seen in the output, the upload to Tesults has been successful. Now we can go back to the web browser and view the results at https://www.tesults.com/results.
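On the upload itself: the dictionary returned by `tesults.results` carries the `success`, `message`, `warnings` and `errors` keys that the conftest.py prints. If you would rather have a script or CI job react when the upload does not go through, a small check along these lines could be used. `upload_ok` is a hypothetical helper and the sample response values are made up.

```python
def upload_ok(response):
    """Return True when the upload response reports success and no errors."""
    return bool(response.get("success")) and not response.get("errors")


# Made-up response with the same keys the conftest.py prints.
sample = {"success": True, "message": "Success", "warnings": [], "errors": []}
print(upload_ok(sample))  # True
```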

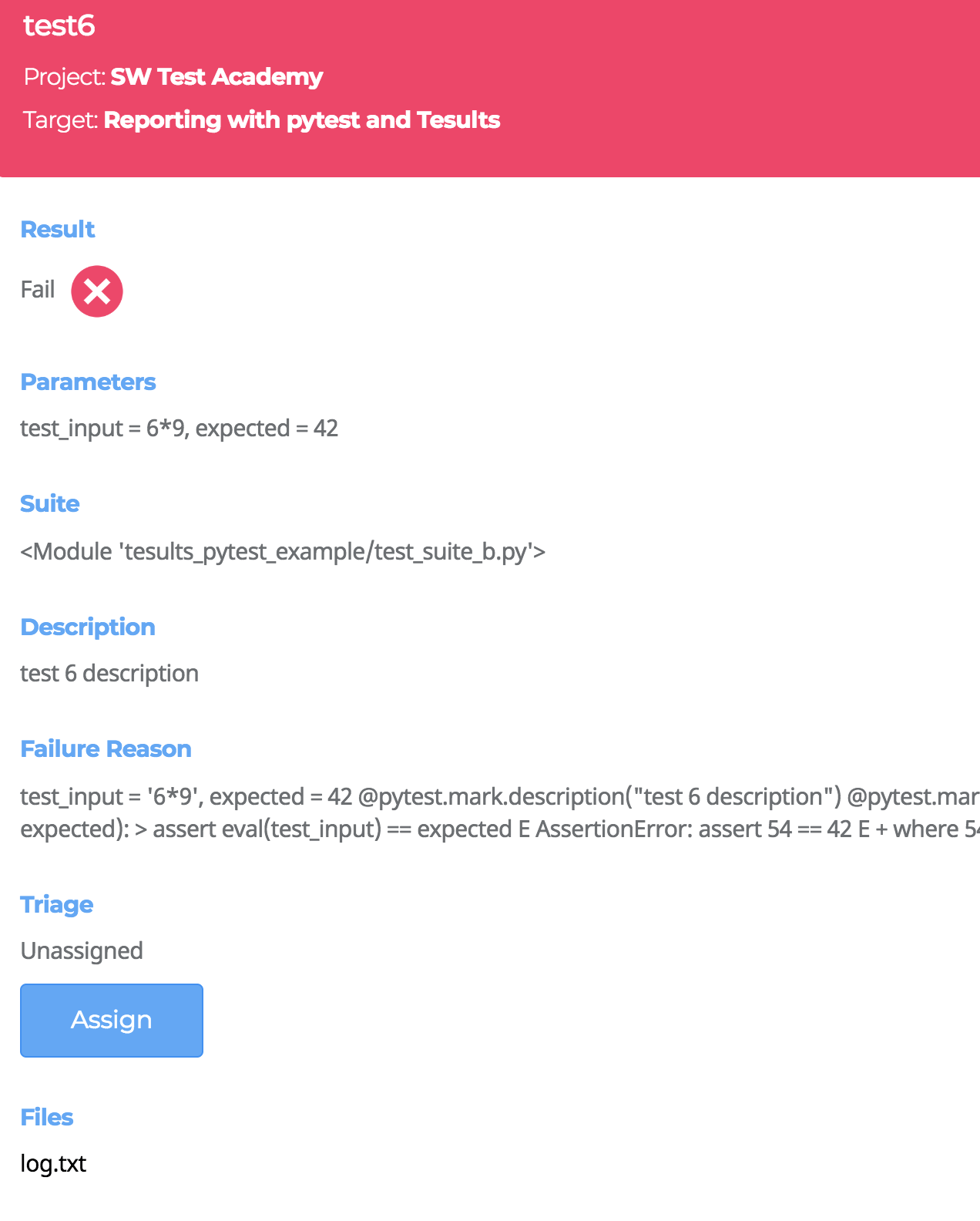

Clicking on a test case displays details:

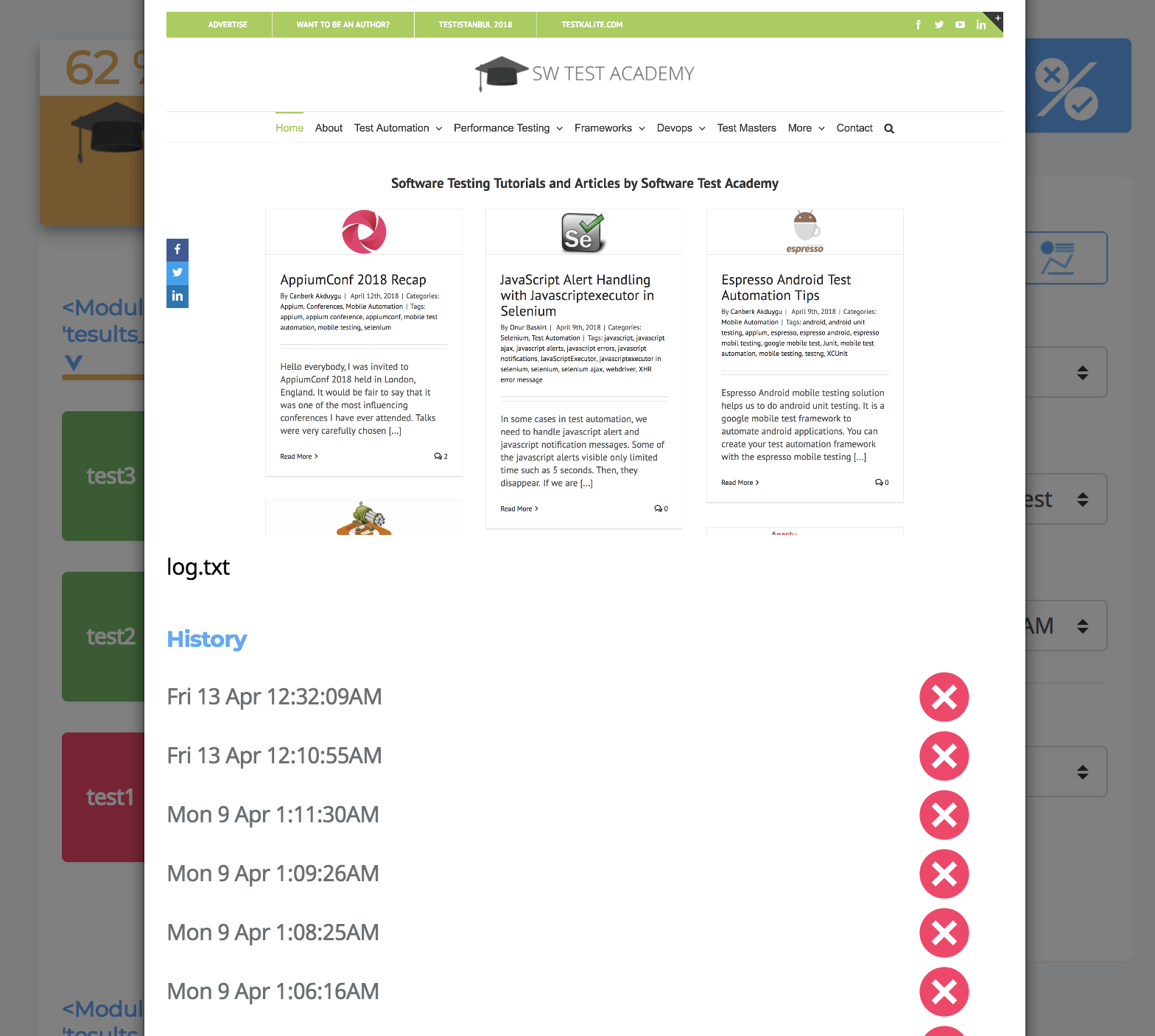

You can see the failure reason, and the log for the test is also available.

If you upload screen captures, they are shown as previews in the test case.
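For logs and screen captures to be attached, the `filesForTest` helper in conftest.py looks for a directory named after the test under the placeholder path `path-to-test-generated-files`. The sketch below performs the same kind of lookup against a temporary directory; `files_for_test`, the test name and the file names are illustrative.

```python
import os
import tempfile


def files_for_test(base_dir, test_name):
    """Collect every file under base_dir/test_name, including subdirectories."""
    found = []
    test_dir = os.path.join(base_dir, test_name)
    for dirpath, _dirnames, filenames in os.walk(test_dir):
        for filename in sorted(filenames):
            found.append(os.path.join(dirpath, filename))
    return found


with tempfile.TemporaryDirectory() as base:
    # Simulate a test that saved a log and a screenshot while it ran.
    os.makedirs(os.path.join(base, "test_login"))
    for filename in ("test.log", "screenshot.png"):
        with open(os.path.join(base, "test_login", filename), "w") as handle:
            handle.write("example")
    collected = [os.path.basename(p) for p in files_for_test(base, "test_login")]
    print(collected)  # ['screenshot.png', 'test.log']
```

In a real project the test itself would write its files into that per-test directory before the hook runs.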

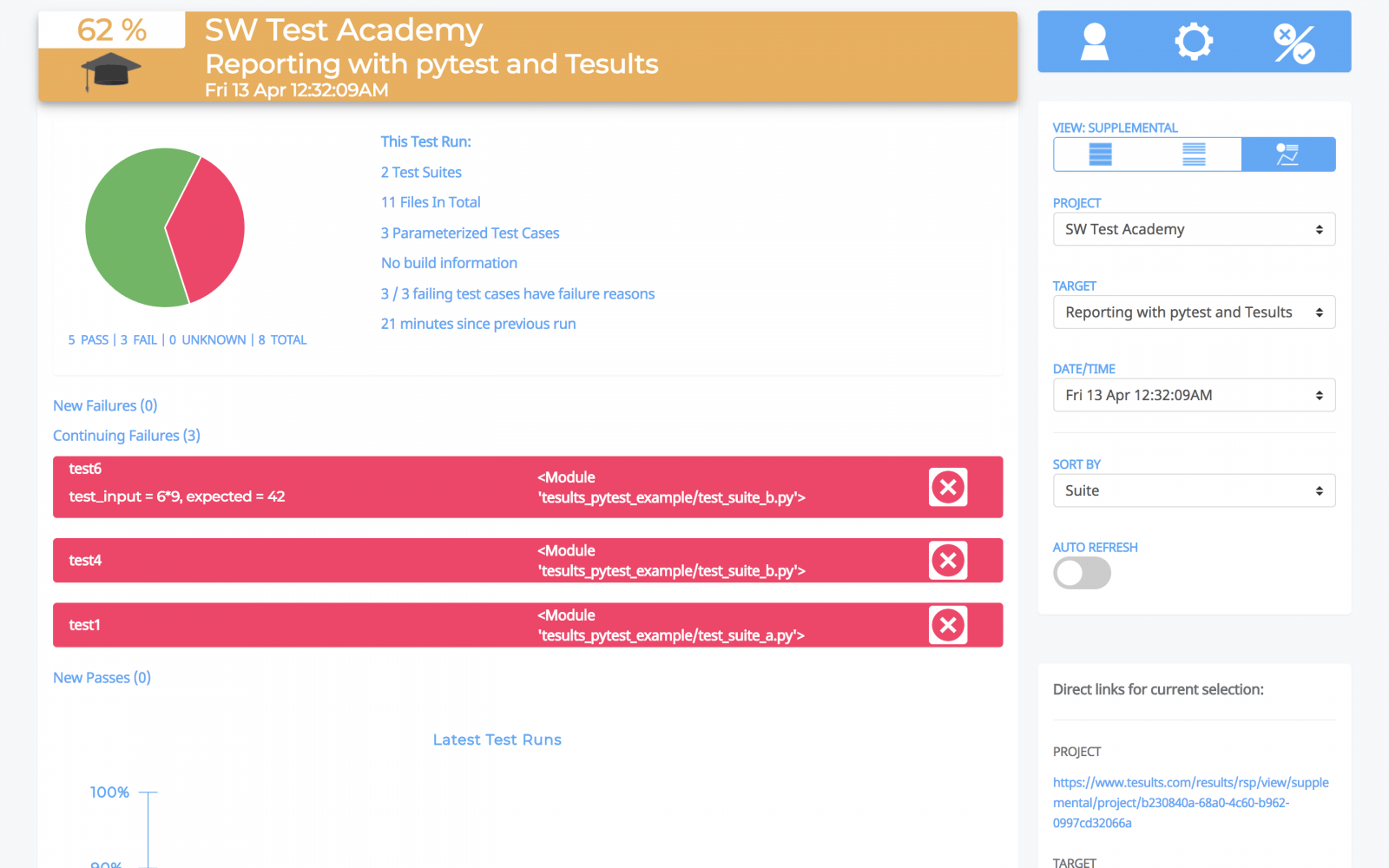

The view above becomes useful when several test runs have occurred because you can see new and continuing failures.
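The idea of new versus continuing failures can be illustrated by comparing two runs by test name. This is only a sketch of the concept, not how Tesults computes it; the function name and the sample results are invented.

```python
def classify_failures(previous, current):
    """Split the failures in `current` into new and continuing ones.

    Both arguments map a test name to 'pass' or 'fail'.
    """
    new, continuing = [], []
    for name, result in sorted(current.items()):
        if result != "fail":
            continue
        if previous.get(name) == "fail":
            continuing.append(name)  # failed last time as well
        else:
            new.append(name)  # passed, or did not exist, last time
    return {"new": new, "continuing": continuing}


previous_run = {"test_login": "fail", "test_search": "pass"}
current_run = {"test_login": "fail", "test_search": "fail"}
print(classify_failures(previous_run, current_run))
# {'new': ['test_search'], 'continuing': ['test_login']}
```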


Note: This article is written in collaboration with Ajeet Dhaliwal.

Thanks.

Onur Baskirt is a Software Engineering Leader with international experience in world-class companies. Now, he is a Software Engineering Lead at Emirates Airlines in Dubai.
