In this article, I will share my experience in microservices testing. In recent years, software development teams have been adopting the microservices architecture so that they can develop, test, and deploy services independently and faster. These architectures come with many complexities, and to test these systems effectively, we need to understand the system architecture very well.

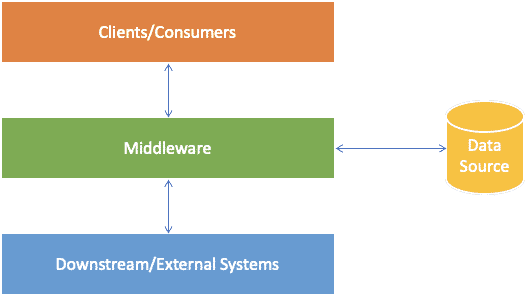

Let’s start with a simple architectural view of microservices.

Generally, we have clients/channels/consumers such as Web, Mobile Web, Mobile Apps, and Desktop. We may have some downstream or external services that perform business-critical operations, such as loyalty operations and customer data operations, and they may hold critical business data. These operations and data depend on the company’s sector. Between the clients and the external systems, we have a middleware layer that communicates with both sides, translates data, and performs some business operations.

* Here, ideally, we have multiple data sources, one for each service.

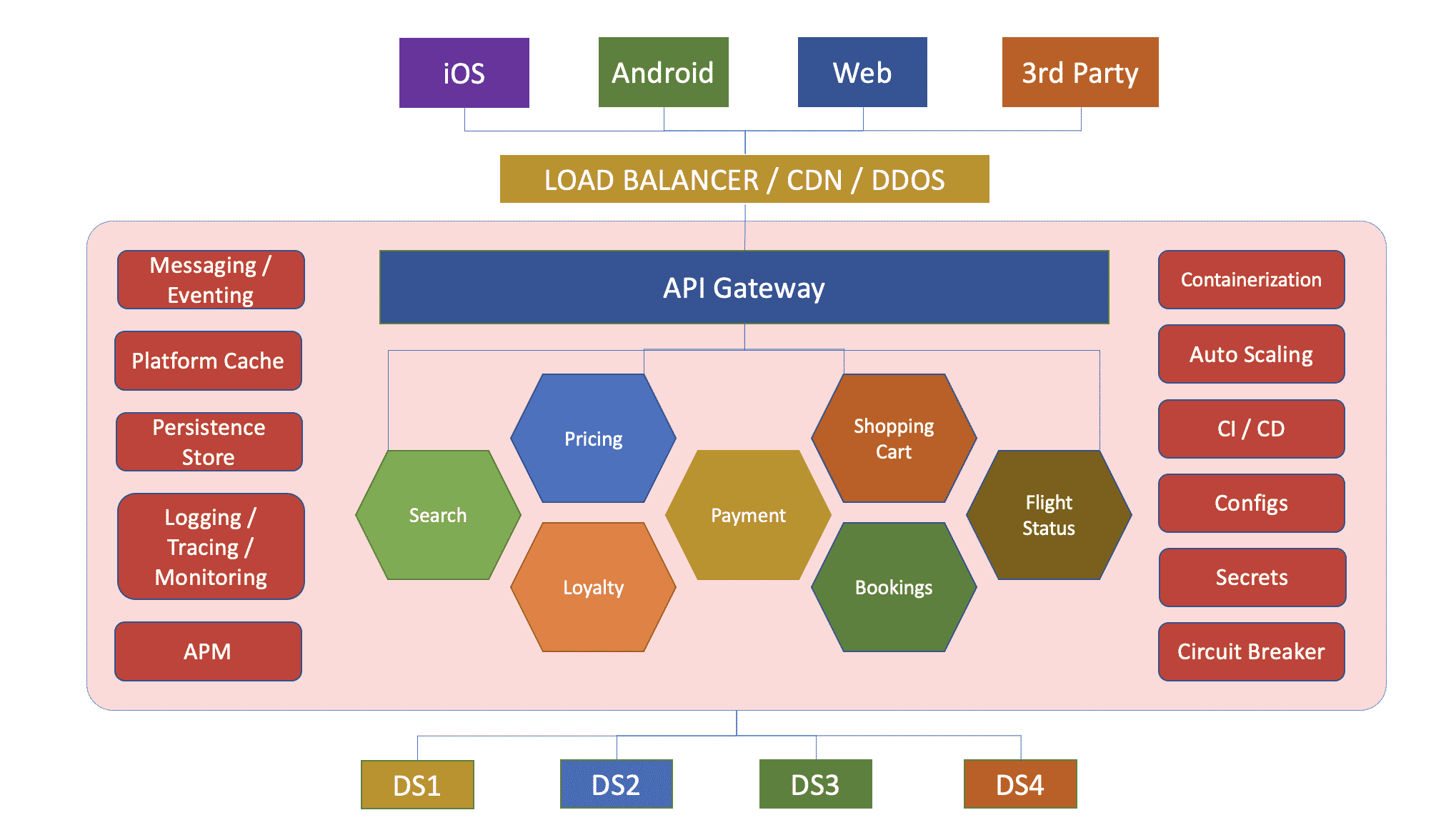

In the architecture above, we may have several services at the middleware level. They communicate with each other, with clients, and with external systems. To test this layer, we must also know the internal architecture of the middleware. Generally, it consists of an API gateway, microservices, data stores, and other elements such as message queues.

Requests from the channels are generally forwarded and routed to the services via the gateway. We should test the gateway very carefully because all communication goes through it. After the gateway level, we have microservices. Some interact with each other and communicate with data stores, external systems, and the API gateway.

We have covered a glimpse of the microservices architecture. From now on, I want to focus on microservices testing strategies. In a typical microservices architecture, we need to deal with the areas shown in the diagram below, and more. These challenges give rise to complex testing problems.

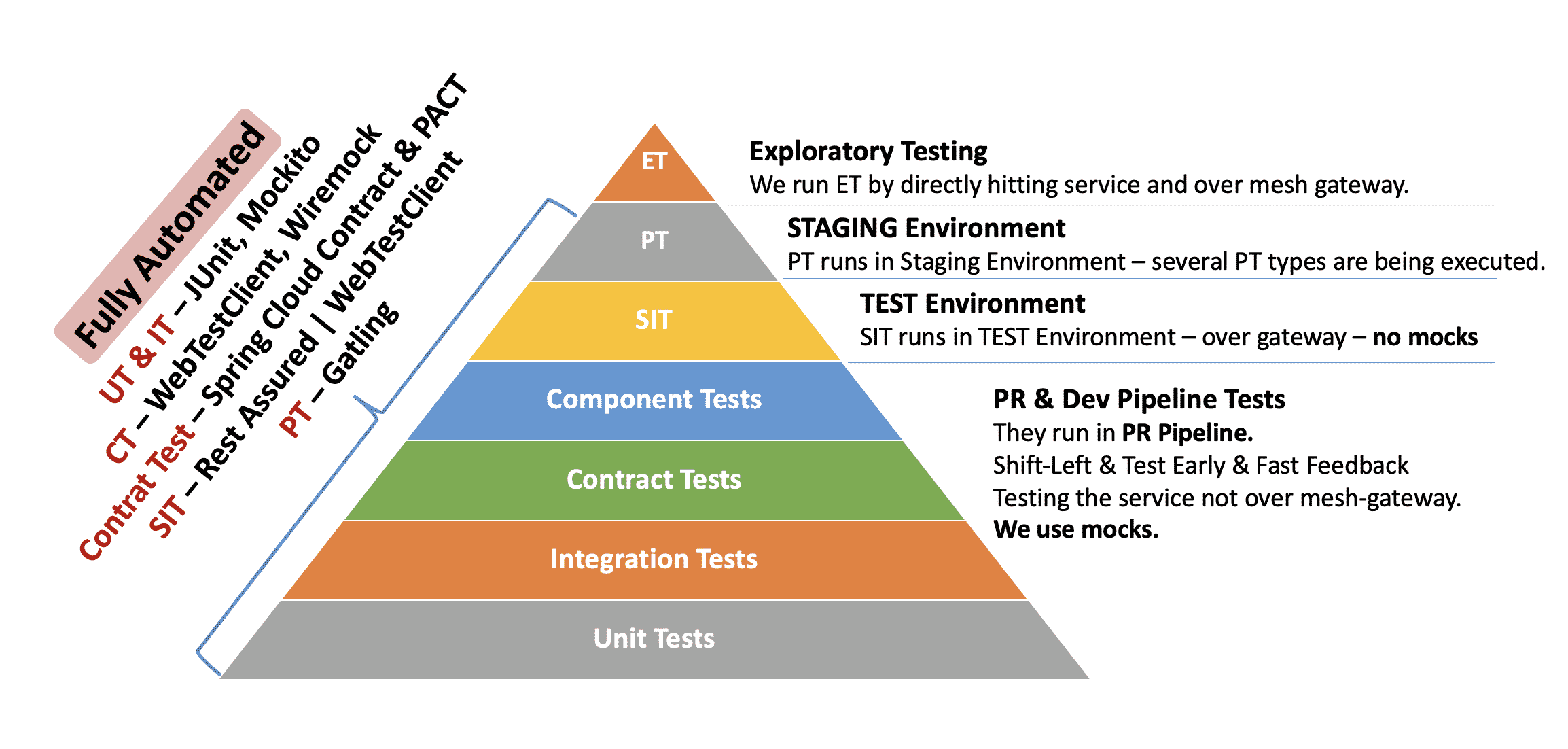

As you see, we have multiple layers and components in this architecture; thus, we need to modify the “famous test pyramid,” and we will have several test types.

Along with the common functional testing types, we should also have some other testing types, such as load testing, endurance testing, stress testing, spike testing, load balancing testing, chaos testing, data replication testing, accessibility testing, security testing, mutation testing, etc. In this way, we enhance the quality of microservices and the whole system.

If UI elements exist in our scope, we need to do UI-related testing such as UI automation, visual testing, accessibility testing, etc. But UI-related tests mainly belong to channel/consumer-side testing.

We follow the testing pyramid for microservices testing, which is shown in the diagram below. We have more unit tests than component tests and more component tests than system integration tests. In some cases, the number of integration tests may be small; it depends on the service and its integrations. Also, we run the contract tests as early as possible. They give early feedback about contract mismatches and run faster than functional tests.

Let’s proceed by explaining these testing types one by one. With them, we can test the microservices effectively. Still, before going further, I would like to categorize these tests as Pre-Deployment and Post-Deployment.

Pre-Deployment Tests

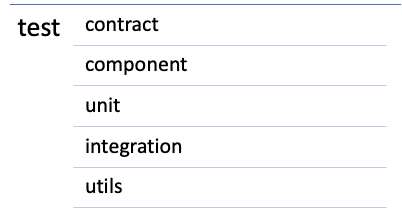

These tests are written inside the project of the service (i.e., under the test package of the service), and in each service’s CI/CD pipeline, we run them before the deployment phase. A sample view of these packages may look like the one below.

If you have Java-based microservices and use Maven as your build and dependency management tool, you can trigger all of these tests in the CI/CD pipeline in the order below by using Maven commands. Also, if you use CI/CD tools like Jenkins, you can put these mvn commands in your Jenkins Groovy script and manage your stages. For example, you can trigger component tests with the mvn command below:

mvn test -Dtest=${your project's test path}.ct.**

Also, you can modify these commands based on your needs and microservice requirements. Then in the pipeline, they can run in the order below. (You can switch the execution order of component and contract tests; in my opinion, contract tests should run before component tests. This is also good practice for contract-first testing.)

Now, let’s elaborate on these testing types more.

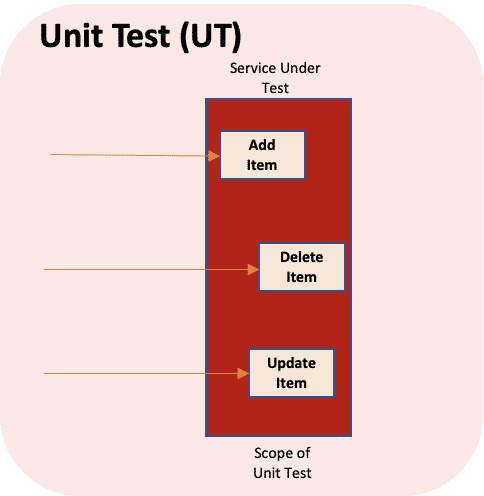

Unit Tests

A unit test focuses on a single “unit of code” to isolate each part of the program and verifies that the individual parts are correct. Generally, developers write unit tests using unit testing libraries such as JUnit, Mockito, etc. They directly call the implementation methods in unit tests. They do not need to start the service locally and hit it via its endpoint.
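To make the idea concrete, here is a minimal sketch of a unit-level check in plain Java. It deliberately avoids JUnit and Mockito so that it runs on its own; the `LoyaltyCalculator` class, the `TierLookup` collaborator, and the points rule are hypothetical names invented for illustration, and the hand-written lambda stub plays the role that a Mockito mock would play in a real project.

```java
// Hypothetical collaborator; in a real service this would query a data store.
interface TierLookup {
    String tierOf(String customerId);
}

// Hypothetical unit under test: pure business logic with no I/O of its own.
class LoyaltyCalculator {
    private final TierLookup tiers;

    LoyaltyCalculator(TierLookup tiers) {
        this.tiers = tiers;
    }

    // Everyone earns 1 point per 10 units spent; GOLD members earn double.
    int pointsFor(String customerId, int amountSpent) {
        if (amountSpent < 0) {
            throw new IllegalArgumentException("amountSpent must not be negative");
        }
        int base = amountSpent / 10;
        return "GOLD".equals(tiers.tierOf(customerId)) ? base * 2 : base;
    }
}

class LoyaltyCalculatorUnitTest {
    // Hand-written stub: always answers with the given tier, no data store involved.
    static LoyaltyCalculator calculatorWithTier(String tier) {
        return new LoyaltyCalculator(customerId -> tier);
    }

    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) {
        // The implementation method is called directly; no service is started.
        check(calculatorWithTier("SILVER").pointsFor("c1", 250) == 25, "silver earns base points");
        check(calculatorWithTier("GOLD").pointsFor("c1", 250) == 50, "gold earns double points");

        boolean rejected = false;
        try {
            calculatorWithTier("GOLD").pointsFor("c1", -1);
        } catch (IllegalArgumentException expected) {
            rejected = true;
        }
        check(rejected, "negative amounts are rejected");
        System.out.println("all unit checks passed");
    }
}
```

With JUnit and Mockito, the same checks would typically become `@Test` methods with a mocked `TierLookup`; the structure of the test stays the same.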

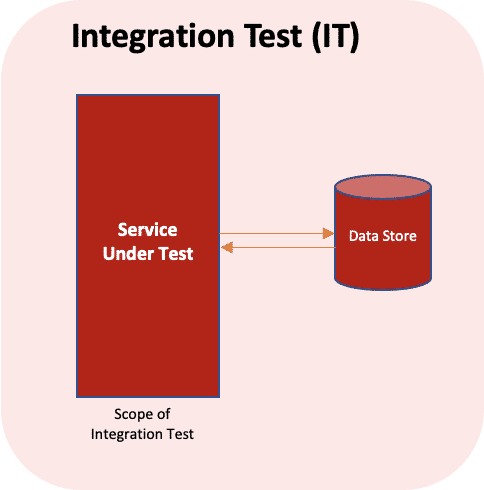

Integration Tests

They verify the communication paths and interactions between components, such as data store connections, to detect interface defects. We do not need to start the service locally for integration tests either. We can call any implemented method required for the test, but we need to check the integration with external systems, such as a DB connection or a connection to another service.

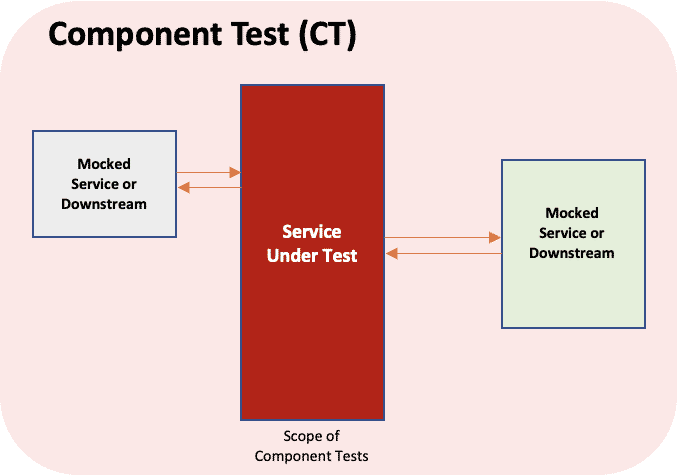

Component Tests

In a microservice architecture, the components are the services themselves. Here we need to isolate each component (service) from its peers or collaborators and write tests at this granularity. We can use tools like WireMock to mock external systems or other services. We can also use in-memory databases to replace real DBs, but this adds a bit more complexity. Ideally, we should mock all the external dependencies and test the service in isolation. In component tests, we should start the service locally and automatically (if you have a reactive Spring Boot WebFlux project, you can use WebTestClient with the @AutoConfigureWebTestClient annotation to do this), and once the service has started, we should hit its endpoints to test our functional requirements.

We need to cover as many functional test scenarios as we can at this level because component tests run before deployment; if there is a functional problem in our service, we can detect it before deployment. This complies with the shift-left approach and early testing.
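The flow of a component test (stub the dependency, start the service locally, hit its endpoint) can be sketched with nothing but the JDK. This is only an illustration under stated assumptions: the greeting service, its `/greeting` endpoint, and the `/customers/42` dependency are hypothetical, and the JDK's built-in `HttpServer` stands in both for the service under test and for the stub that WireMock would normally provide.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

class ComponentTestSketch {
    static final HttpClient CLIENT = HttpClient.newHttpClient();

    // Writes a plain-text response and closes the exchange.
    static void respond(HttpExchange exchange, int status, String body) throws IOException {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        exchange.sendResponseHeaders(status, bytes.length);
        try (OutputStream out = exchange.getResponseBody()) {
            out.write(bytes);
        }
    }

    static HttpResponse<String> get(String url) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        return CLIENT.send(request, HttpResponse.BodyHandlers.ofString());
    }

    // Port 0 lets the operating system pick a free port.
    static HttpServer newLocalServer() throws IOException {
        return HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
    }

    static String baseUrl(HttpServer server) {
        return "http://127.0.0.1:" + server.getAddress().getPort();
    }

    // Stub for the external customer service (the role WireMock plays in a real project).
    static HttpServer startCustomerStub(int status, String body) throws IOException {
        HttpServer stub = newLocalServer();
        stub.createContext("/customers/42", exchange -> respond(exchange, status, body));
        stub.start();
        return stub;
    }

    // Hypothetical service under test: GET /greeting asks the customer service for a name.
    static HttpServer startGreetingService(String customerServiceUrl) throws IOException {
        HttpServer service = newLocalServer();
        service.createContext("/greeting", exchange -> {
            try {
                HttpResponse<String> customer = get(customerServiceUrl + "/customers/42");
                if (customer.statusCode() == 200) {
                    respond(exchange, 200, "Hello, " + customer.body() + "!");
                } else {
                    respond(exchange, 502, "customer service unavailable");
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                respond(exchange, 500, "interrupted");
            }
        });
        service.start();
        return service;
    }

    // One scenario: stub the dependency, start the service, hit its endpoint, clean up.
    static String runScenario(int stubStatus, String stubBody) {
        HttpServer stub = null;
        HttpServer service = null;
        try {
            stub = startCustomerStub(stubStatus, stubBody);
            service = startGreetingService(baseUrl(stub));
            HttpResponse<String> response = get(baseUrl(service) + "/greeting");
            return response.statusCode() + " " + response.body();
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException("component test scenario failed", e);
        } finally {
            if (service != null) service.stop(0);
            if (stub != null) stub.stop(0);
        }
    }

    public static void main(String[] args) {
        System.out.println(runScenario(200, "Ada"));  // happy path
        System.out.println(runScenario(503, "down")); // dependency failure
    }
}
```

Because the stub is fully under the test's control, failure scenarios such as the 503 case are as easy to cover as the happy path, which is hard to arrange against a real dependency.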

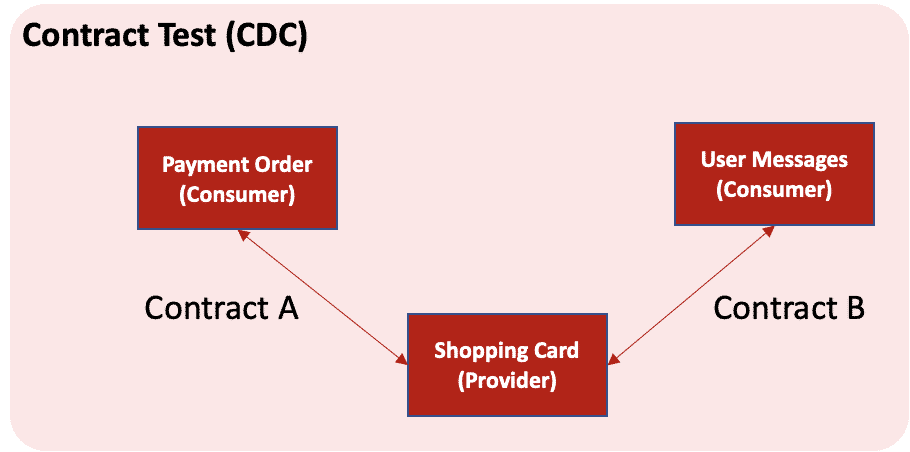

Contract Tests (Consumer-Driven Contract Tests)

Contracts are a series of agreements between the service provider and the consumer. The providers can test their service against these contracts to ensure they are not breaking any consumers, thereby helping to keep the service backward compatible.

If you use Spring Boot, you can use Spring Cloud Contract, or you can use Pact for contract testing. Pact was originally written for Ruby, but it is now available for many different languages, e.g., JavaScript, Python, and Java.

Before starting contract tests, there should be an agreement between consumers/channels and providers/middleware/external systems. Then, we should start to use the defined contracts to write contract tests.

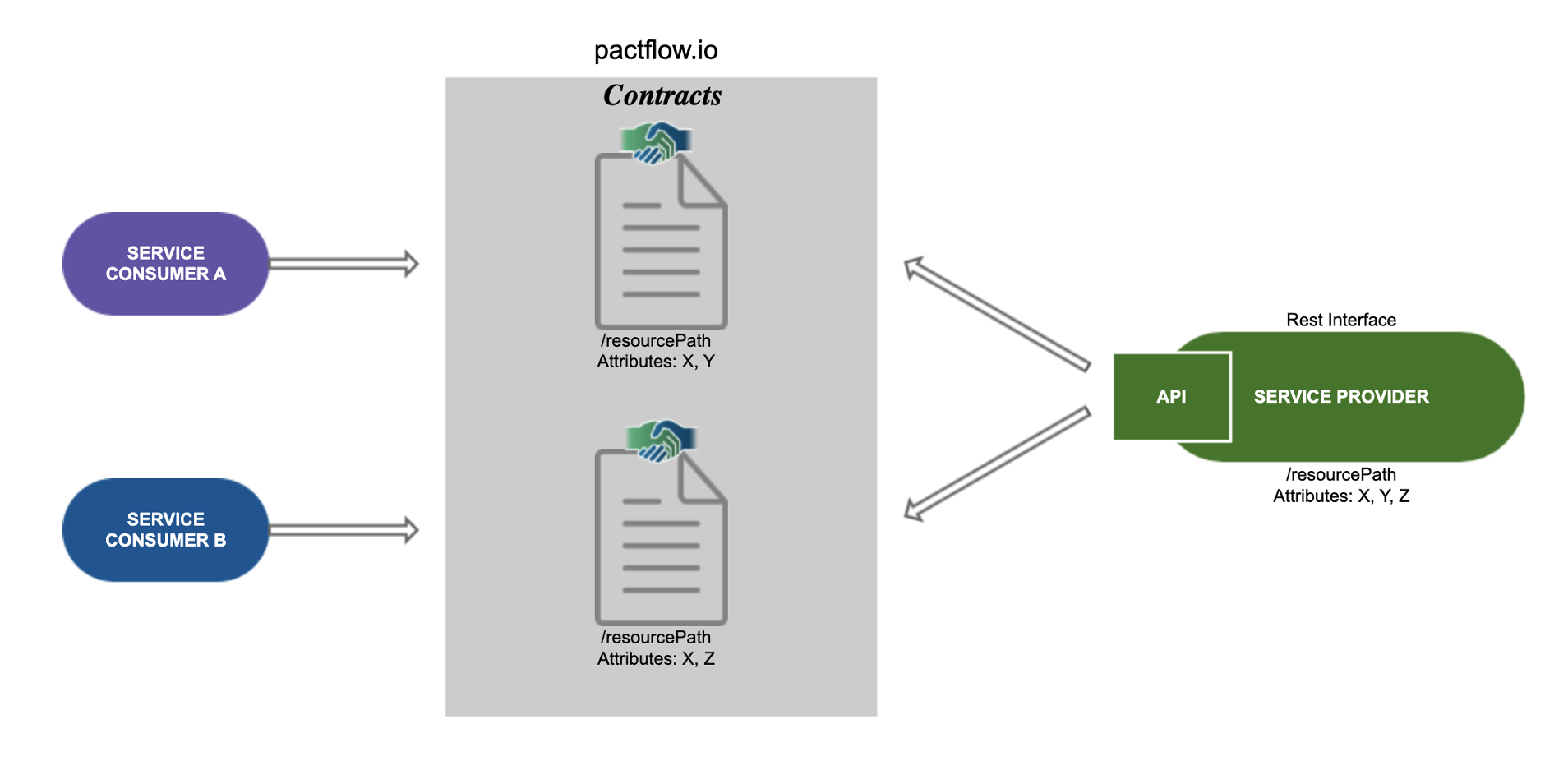

The idea behind Consumer-Driven Contract Testing (CDC) is that the consumer service runs a suite of integration tests against the provider service’s API. These tests generate a file called a contract, and the provider service can then use it to verify the contract expectations between consumer and provider.
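As a rough illustration of the principle, the sketch below models a contract as the set of fields (and their types) that the consumer reads, and lets the provider verify a response against it. This is a hand-rolled simplification, not the Pact or Spring Cloud Contract API; the class name, the field names, and the sample responses are all hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ContractSketch {
    // Consumer side: the fields, with types, that the consumer actually reads
    // from the (hypothetical) customer endpoint.
    static Map<String, Class<?>> consumerContract() {
        Map<String, Class<?>> contract = new LinkedHashMap<>();
        contract.put("id", Integer.class);
        contract.put("name", String.class);
        contract.put("tier", String.class);
        return contract;
    }

    // Provider side: checks a response against the contract and lists every violation.
    // Extra fields in the response are allowed, which keeps additive changes compatible.
    static List<String> verify(Map<String, Class<?>> contract, Map<String, Object> response) {
        List<String> violations = new ArrayList<>();
        for (Map.Entry<String, Class<?>> expected : contract.entrySet()) {
            Object actual = response.get(expected.getKey());
            if (actual == null) {
                violations.add("missing field: " + expected.getKey());
            } else if (!expected.getValue().isInstance(actual)) {
                violations.add("wrong type for " + expected.getKey()
                        + ": expected " + expected.getValue().getSimpleName()
                        + ", got " + actual.getClass().getSimpleName());
            }
        }
        return violations;
    }

    // Current provider response: satisfies the contract and adds one extra field.
    static Map<String, Object> compatibleResponse() {
        Map<String, Object> response = new LinkedHashMap<>();
        response.put("id", 42);
        response.put("name", "Ada");
        response.put("tier", "GOLD");
        response.put("createdAt", "2024-01-01");
        return response;
    }

    // A provider change that would break the consumer: id became a string, tier was dropped.
    static Map<String, Object> breakingResponse() {
        Map<String, Object> response = new LinkedHashMap<>();
        response.put("id", "42");
        response.put("name", "Ada");
        return response;
    }

    public static void main(String[] args) {
        System.out.println("compatible: " + verify(consumerContract(), compatibleResponse()));
        System.out.println("breaking:   " + verify(consumerContract(), breakingResponse()));
    }
}
```

The important design point is that the contract only lists what the consumer depends on, so the provider stays free to add fields without breaking anyone.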

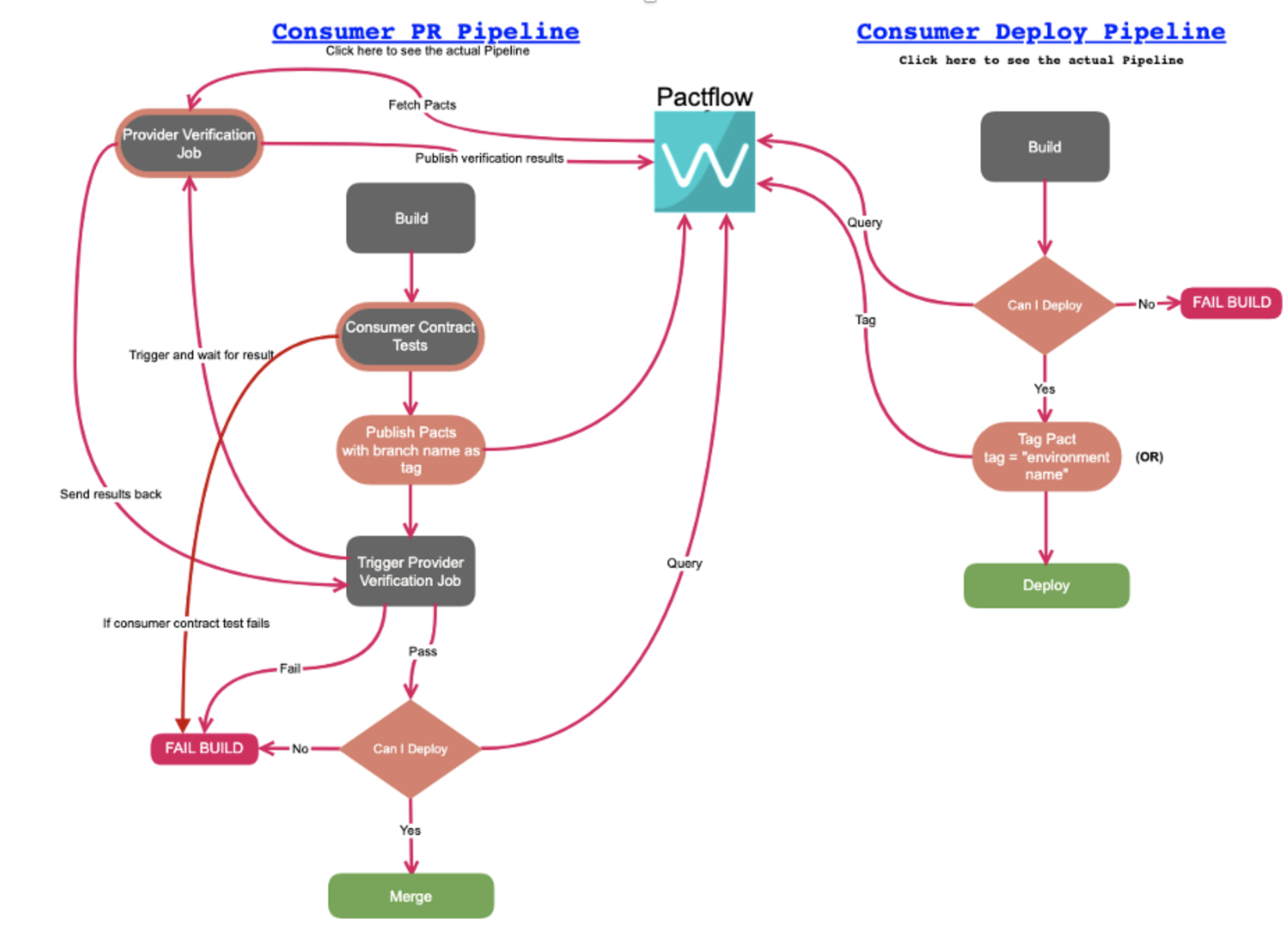

Below is an overview of the consumer contract tests integration in the PR pipeline and how the deploy pipeline works with pact integration.

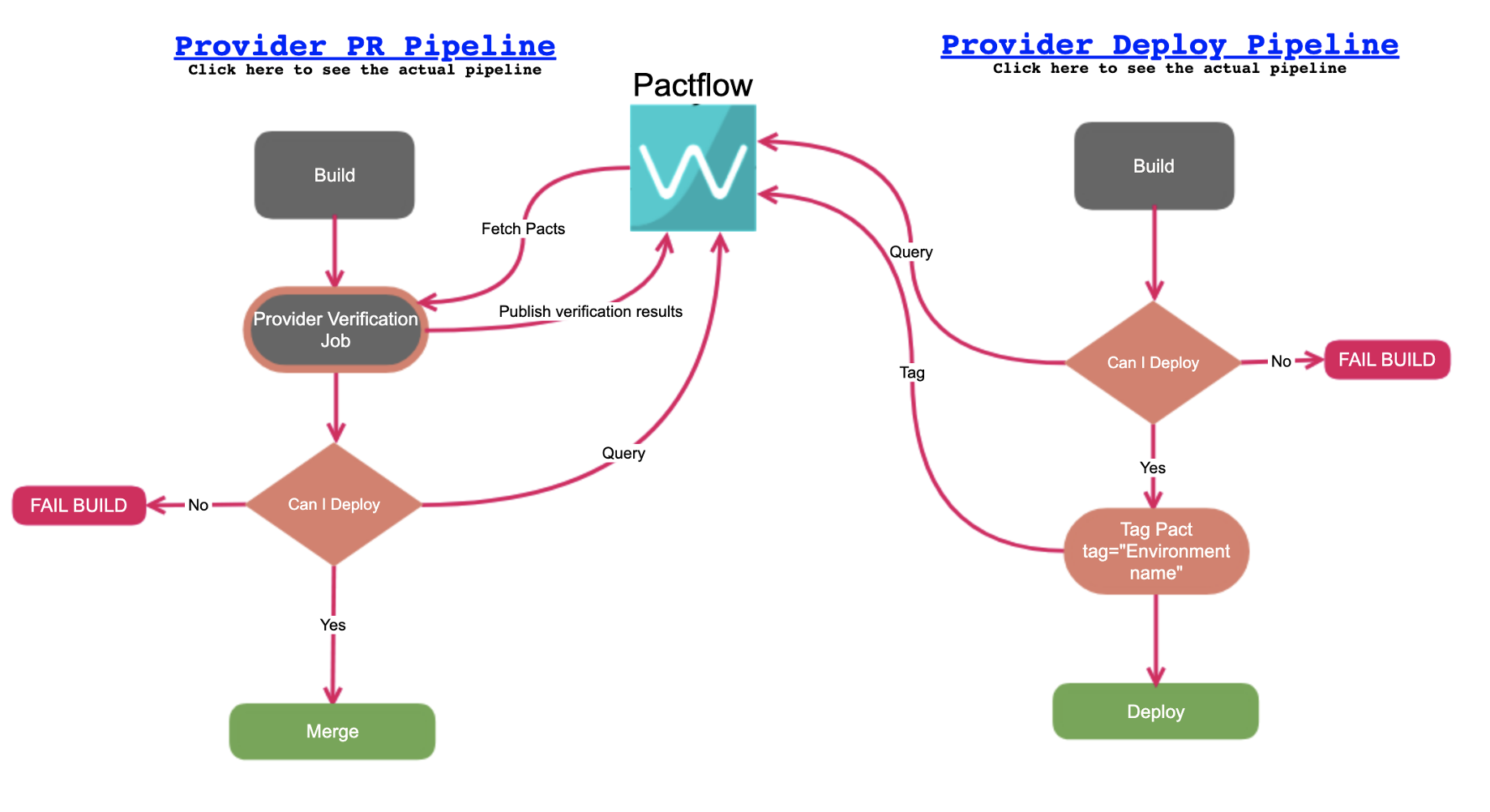

Below is the provider verification integration in the PR pipeline.

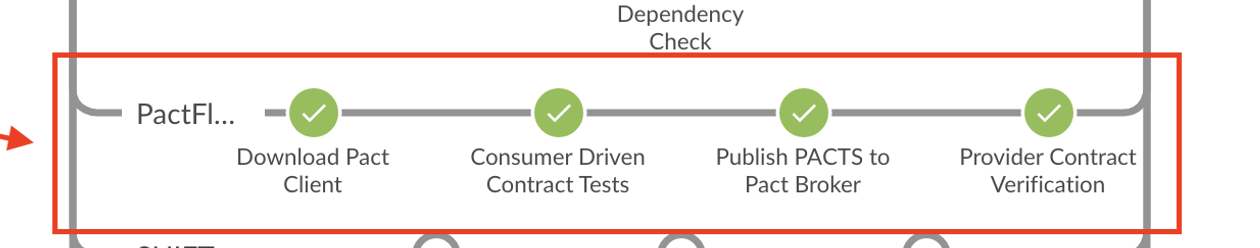

Both consumer and provider contract tests run in the PR pipeline, as shown below.

Post-Deployment Tests

System Integration Tests (E2E Tests)

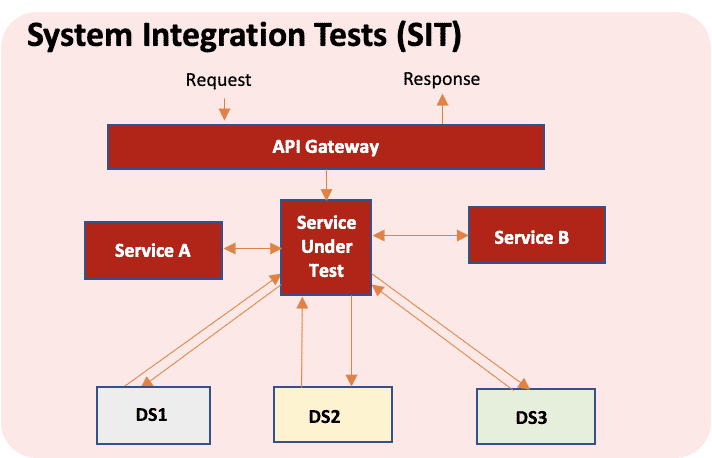

System Integration Tests (SIT) ensure that we build the right system and verify how the application behaves in an integrated environment. We test the important user flows from start to finish to ensure they behave as expected. We should not use any mocks or stubs for system integration tests; all system components should be integrated. This way, we can ensure that system-level integration works as expected. These tests take more time than the others, so we should focus on the critical business flows at the SIT level.

If you have a Java-based tech stack, you can use REST Assured to write SIT tests. For these tests, we follow the procedure below.

- Prioritize the backlog.

- Gather high-level business rules.

- Elaborate on those business rules and break them down into steps.

- Prepare required test data, endpoints, and expected results.

- Start to write test scenarios based on the above inputs.

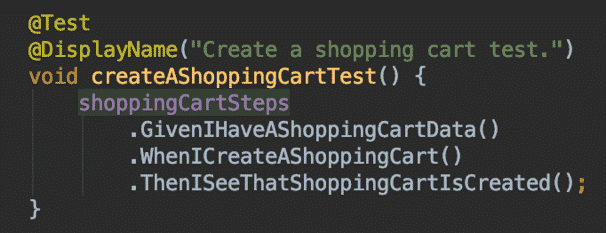

After exploration and discovery, we create our test scenarios and then start to implement SIT tests. You can also use Cucumber or any other BDD library to add a Gherkin flavor to your tests, or create your own step classes to hide the details inside their methods.

Performance Tests

After deployment to the test or stage environment, we start to run service-level performance tests. In microservices, we can do performance testing in several ways. First, we mock all external dependencies and hit the service endpoint directly to measure standalone service performance. This test gives us the isolated performance of the service, which means we focus only on the service itself.

For these tests, we can use several technologies or tools such as Gatling, JMeter, Locust, Taurus, etc. If you have a Java-based stack and choose Gatling as a performance tool, I suggest creating a Maven-based project and integrating your tests into your pipeline. Also, we can check the server behavior using APM tools such as New Relic, Dynatrace, AppDynamics, etc. These tools give extra details about service and system performance, and it is useful to review them along with the performance test results.
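To show what such a tool measures, here is a minimal sketch of a load generator in plain Java. It is not a replacement for Gatling or JMeter; the class name, the user and request counts, and the simulated 5 ms request are assumptions made for illustration. In a real test, the `Runnable` would send an HTTP request to the service endpoint.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class LoadTestSketch {
    // Runs `users` concurrent virtual users, each sending `requestsPerUser` requests,
    // and returns all per-request latencies in milliseconds, sorted ascending.
    static List<Long> run(int users, int requestsPerUser, Runnable request) {
        ExecutorService pool = Executors.newFixedThreadPool(users);
        List<Future<List<Long>>> futures = new ArrayList<>();
        for (int u = 0; u < users; u++) {
            futures.add(pool.submit(() -> {
                List<Long> latencies = new ArrayList<>();
                for (int i = 0; i < requestsPerUser; i++) {
                    long start = System.nanoTime();
                    request.run();
                    latencies.add((System.nanoTime() - start) / 1_000_000);
                }
                return latencies;
            }));
        }
        List<Long> all = new ArrayList<>();
        try {
            for (Future<List<Long>> future : futures) {
                all.addAll(future.get());
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdownNow();
        }
        Collections.sort(all);
        return all;
    }

    // Nearest-rank percentile on an already sorted, non-empty list.
    static long percentile(List<Long> sorted, double p) {
        if (sorted.isEmpty()) {
            throw new IllegalArgumentException("no samples");
        }
        int rank = (int) Math.ceil(p / 100.0 * sorted.size());
        return sorted.get(Math.max(rank, 1) - 1);
    }

    public static void main(String[] args) {
        // Stand-in for a real call to the service endpoint: sleeps for about 5 ms.
        Runnable simulatedRequest = () -> {
            try {
                Thread.sleep(5);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        List<Long> latencies = run(10, 20, simulatedRequest);
        System.out.println("requests: " + latencies.size());
        System.out.println("p50 ms:   " + percentile(latencies, 50));
        System.out.println("p95 ms:   " + percentile(latencies, 95));
    }
}
```

Percentiles such as p95 are usually more informative than averages here because a few slow requests can hide behind a good mean.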

Also, we test the service performance by hitting the service through the API gateway. This time we include the gateway factors in our performance tests. In real life, all channel requests pass through the gateway before reaching the services. That’s why it is also important to do performance testing through the gateway. Here, we may not use mocks or stubs. Still, if we communicate with external systems, we should think about performance test data very carefully before starting performance tests. If external systems are slow, they will affect our performance test results, and we should use APM tools to figure out the problematic areas.

If we have a hybrid server architecture, meaning some of the servers are on-premise and some are in the cloud (AWS, Azure, etc.), it is worth doing performance testing for each server. This way, we will know both the on-premise and the cloud performance.

Also, in a hybrid architecture, data replications happen between on-premise and cloud servers. Therefore, we need to do XDCR (Cross Data Center Replication) tests to determine the maximum data replication performance. For example, we create a shopping cart on-premise, read it in the cloud server, update it on-premise, delete a product in the cloud, and read it on-premise again. We can generate many scenarios, and all of these scenarios should run flawlessly with given pause intervals between each operation.

Also, we need to perform extra performance tests. They may not be in the pipeline, but we must consider them to evaluate system performance and stability. We perform spike testing by applying sudden spike loads and check the auto-scaling functionality in these tests.

Also, we add endurance test jobs to our CI/CD platform with a parameterized execution time and run the endurance tests for an extended period to check the service behavior and stability over time.

We can also check load-balancing factors in performance testing. In these tests, we go through the load balancer, then the API gateway, and then the services.

Security and Vulnerability Tests and Scans

In the service pipeline after deployment, we should add automated vulnerability and security scans by using tools such as Zed Attack Proxy (ZAP), Netsparker, etc. In each new PR, we can scan our service with OWASP security and vulnerability rules. If you use ZAP, you can refer here and here to scan your APIs.

sh "/zap/zap-full-scan.py -d -m 5 -r zapreport.html -t http://${example-service}.svc:8080"

docker run -t owasp/zap2docker-stable zap-full-scan.py -t https://www.example.com

Chaos Testing

Chaos testing tests the resiliency and integrity of the system by proactively simulating failures. It is a simulated crisis for the system, and we observe how the system responds to it. This way, we know the system behavior when unplanned and random disruptions happen. These disruptions can be technical failures or natural disasters, such as an earthquake affecting the servers.

We can use chaos testing tools such as Chaos Monkey, which randomly terminates virtual machine instances and containers that run inside your production environment. In this way, we can test the system’s resiliency.
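The principle can be illustrated with a small in-memory simulation. This is only a toy model and not how Chaos Monkey is used: the `Instance` class, the failover client, and the experiment loop are hypothetical. The sketch terminates randomly chosen instances one by one and checks that requests are still served as long as one instance remains alive.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class ChaosSketch {
    // Hypothetical service instance that can be terminated.
    static class Instance {
        final String name;
        boolean alive = true;

        Instance(String name) {
            this.name = name;
        }

        String handle(String request) {
            if (!alive) {
                throw new IllegalStateException(name + " is down");
            }
            return name + " handled " + request;
        }
    }

    // Client-side failover: try the instances in order until one answers.
    static String callWithFailover(List<Instance> instances, String request) {
        for (Instance instance : instances) {
            try {
                return instance.handle(request);
            } catch (IllegalStateException down) {
                // This instance is down; fall through to the next one.
            }
        }
        throw new IllegalStateException("no instance available");
    }

    // Chaos step: terminate one randomly chosen instance that is still alive.
    static void killRandomInstance(List<Instance> instances, Random random) {
        List<Instance> alive = new ArrayList<>();
        for (Instance instance : instances) {
            if (instance.alive) {
                alive.add(instance);
            }
        }
        if (!alive.isEmpty()) {
            alive.get(random.nextInt(alive.size())).alive = false;
        }
    }

    // Kills instances one by one, leaving a single survivor, and counts
    // how many requests were still served in between.
    static int requestsServedDuringChaos(int instanceCount, long seed) {
        List<Instance> instances = new ArrayList<>();
        for (int i = 0; i < instanceCount; i++) {
            instances.add(new Instance("instance-" + i));
        }
        Random random = new Random(seed);
        int served = 0;
        for (int round = 0; round < instanceCount - 1; round++) {
            killRandomInstance(instances, random);
            callWithFailover(instances, "request-" + round);
            served++;
        }
        return served;
    }

    public static void main(String[] args) {
        System.out.println("requests served while killing 2 of 3 instances: "
                + requestsServedDuringChaos(3, 7L));
    }
}
```

In a real experiment, the "kill" step acts on actual infrastructure and the check is made through monitoring and health endpoints, but the hypothesis is the same: the system keeps serving while part of it fails.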

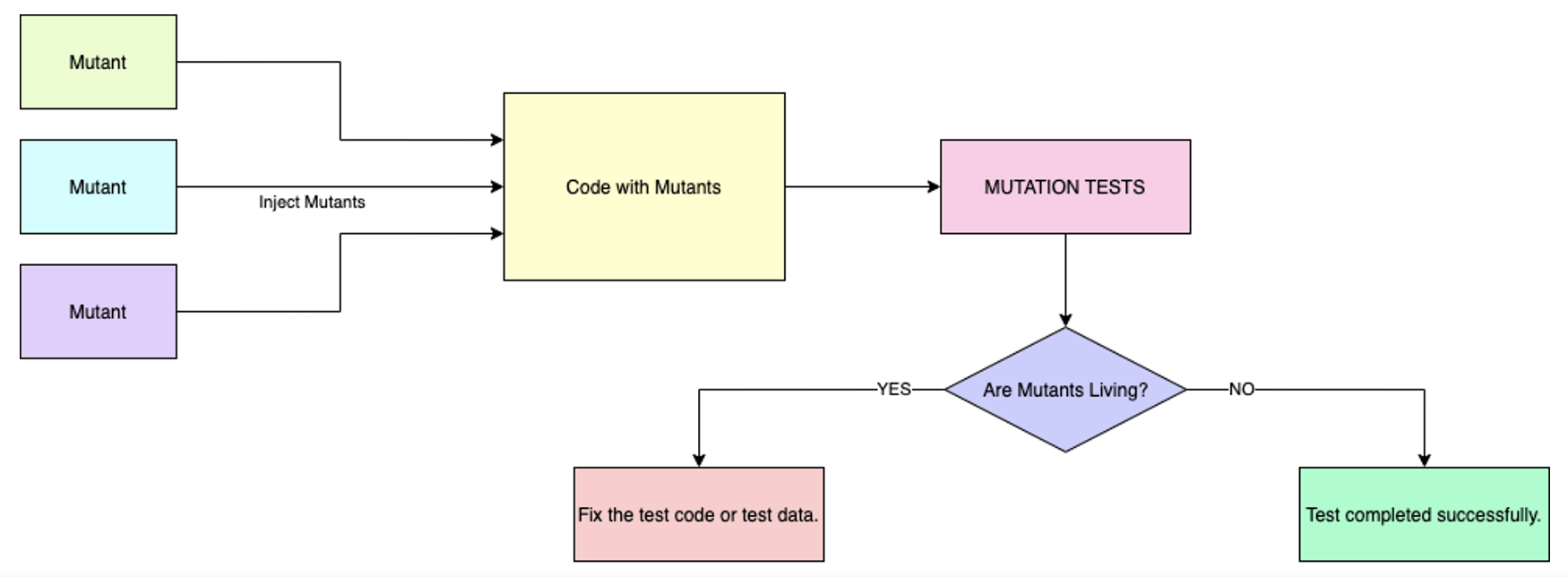

Mutation Testing

Mutation testing is another technique in which we inject mutants into our code and check whether they are killed or still alive after the test execution. If the mutants are killed, it is a good sign that our tests detect the failures; if not, the test code or test data should be improved. Mutation testing assesses the quality of our tests, not the service code itself, and it can be performed as early as possible in the PR pipelines.
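A tiny hand-made example shows what "killed" and "alive" mean. Mutation testing tools generate mutants automatically; in this sketch the mutant is written by hand, and the free-shipping rule, the two test suites, and all names are hypothetical.

```java
import java.util.function.IntPredicate;
import java.util.function.Predicate;

class MutationSketch {
    // Original rule (hypothetical): free shipping for order totals of 100 or more.
    static final IntPredicate ORIGINAL = total -> total >= 100;

    // Mutant: the boundary operator was changed from >= to >.
    static final IntPredicate MUTANT = total -> total > 100;

    // Weak suite: never exercises the boundary value 100.
    static boolean weakSuitePasses(IntPredicate rule) {
        return rule.test(150) && !rule.test(50);
    }

    // Stronger suite: same checks plus the boundary case.
    static boolean strongSuitePasses(IntPredicate rule) {
        return weakSuitePasses(rule) && rule.test(100);
    }

    // A suite kills the mutant when it passes on the original code
    // but fails on the mutated code.
    static boolean kills(Predicate<IntPredicate> suite) {
        return suite.test(ORIGINAL) && !suite.test(MUTANT);
    }

    public static void main(String[] args) {
        System.out.println("weak suite kills mutant:   " + kills(MutationSketch::weakSuitePasses));
        System.out.println("strong suite kills mutant: " + kills(MutationSketch::strongSuitePasses));
    }
}
```

The weak suite leaves the mutant alive because it never tests the value 100, which is exactly the kind of gap in test data that mutation testing is meant to reveal.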

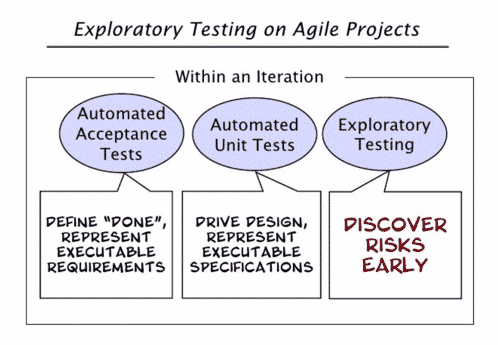

Exploratory Testing

Exploratory testing is an unscripted technique used to discover unknown issues during and after the software development process. We should do exploratory testing throughout the testing life cycle. We generally start exploratory testing as early as possible, right after the requirement analysis phase. This way, we can find the risks and problems earlier, which also helps automated test efforts. We use many inputs and outputs of exploratory testing sessions for automation. Especially for API exploratory testing, we use tools such as Postman or Insomnia. For exploratory testing details, you can check this article.

Test Data Approach

One of the biggest challenges in testing and automated tests is test data. For test data, I suggest the below guidelines:

- It is nice to have test data generation services and call those service endpoints to get freshly created test data for test usage.

- If there is no way to create the test data automatically, it is better to analyze the test data requirements and create the test data manually or get help from the relevant team.

- Try to create new test data before starting and delete it after test completion.

- In Test and Stage environments, I suggest masking the critical test data.
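As an illustration of the last guideline, here is a minimal masking sketch. The masking rules (keep the first character and the domain of an email, keep the last four digits of a card number) are assumptions chosen for the example, not a compliance recommendation, and the class and method names are hypothetical.

```java
class TestDataMasking {
    // Keeps the first character of the local part and the domain; hides the rest.
    static String maskEmail(String email) {
        int at = email.indexOf('@');
        if (at <= 0) {
            return "***"; // not a usable email address, hide it completely
        }
        return email.charAt(0) + "***" + email.substring(at);
    }

    // Strips separators and keeps only the last four digits.
    static String maskCardNumber(String cardNumber) {
        String digits = cardNumber.replaceAll("\\D", "");
        if (digits.length() < 4) {
            return "****";
        }
        return "*".repeat(digits.length() - 4) + digits.substring(digits.length() - 4);
    }

    public static void main(String[] args) {
        System.out.println(maskEmail("ada.lovelace@example.com"));
        System.out.println(maskCardNumber("4111 1111 1111 1111"));
    }
}
```

Keeping a small recognizable part of each value makes masked data easier to trace in logs and test reports while the sensitive part stays hidden.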

Pre-Release Testing Approach

Before releasing our services to our customers, we do some pre-production verification. The service team should do this verification to cover their new changes and critical scenarios before going live.

If the service pipeline is green, SIT tests have passed, performance tests (PT) have passed, UAT is approved, and the final checks on the pre-production environment have passed, we are ready to proceed to the production deployment. Once the deployment to the production environment is done and the new version of the service is being consumed in production, we should monitor it with APM and logging tools.

If you have any questions or doubts, please do not hesitate to write a comment and get in touch with the swtestacademy community.

Thanks for reading.

Onur Baskirt

Onur Baskirt is a Software Engineering Leader with international experience in world-class companies. Now, he is a Software Engineering Lead at Emirates Airlines in Dubai.

Excellent reference article, Onur. I was a bit confused by the top diagram, which shows several services sharing the same data store; that is normally not the case in a microservices architecture. But I guess it is not the main point of this post. Very good to see the full overview of testing activities in a microservices environment. Thanks.

Hi Sinan, thank you so much. You are right, in microservices, each service is a separate system with its own database. As you know, this is a very deep topic, and I just drew the data source as a single entity. Here is also a good document on the SAGA pattern that may interest you: https://blog.couchbase.com/saga-pattern-implement-business-transactions-using-microservices-part/. BTW, as far as I know, in the current implementation, we are using multiple clusters and buckets, defined in the application-local.yml config file.

What is accessibility testing in the case of microservices?

If your test scope also covers the channels/clients testing then you need to think about all UI testing activities such as accessibility testing, Usability testing, UI automation, Visual regressions, and so on.

Hello Onur, do you have any automation testing strategy document for testing microservices and cloud-native applications?

I tried to summarize them in this article. I have more detailed automation training documents and presentations, but they are company confidential and are available only as courses in our company portal. You can also check Martin Fowler’s blog on microservices testing strategies.

Hi Sima,

Check out this https://gethelios.dev/blog/automating-backend-testing-in-microservices-challenges-and-solutions/

Hi,

can you please elaborate on how to do API gateway testing where two gateways are involved in the client API call to the server (1. external client API gateway and 2. service gateway)?

What are all the things required to test the requests in Postman?

Or how can we test them?

What are the ways?

It is a great question.

– First of all, it is wise to test the API gateway multiplexing logic (load balancing), that is, which request goes to which API gateway based on your specific setup and conditions.

– 2nd, what happens if one or both API gateways are down? Better to test these kinds of catastrophic scenarios.

– 3rd, you can test your API gateway routing functionalities.

– 4th, you can test rate-limiting functionality.

– 5th, you can test circuit-breaker functionality.

– 6th, you can test headers functionality which your gateway can process if you have specific conditions for some of the headers.

– 7th, you can test the performance of the gateways, when both of them are working, when each one is working, etc.

– 8th, you can do some security and vulnerability checks on both.

You can add some other scenarios based on your requirements and setup.

First, you need to bring up the two API gateways locally; the services that integrate with these gateways must also be up.

Then, run the tests when both gateways are up.

Then, terminate one gateway and do the same tests.

Then, terminate the other gateway and test the system.

For Postman, you need a request with a URL, headers, payloads, query parameters, etc. These all depend on your system details.

Hi Onur,

Thanks for the article. You mentioned Pre-Deployment Tests and Post-Deployment Tests. As a tester, what exactly are our duties in Pre-Deployment Tests? I understand that these are mostly tests related to software developers. Yes, in Post-Deployment Tests we play an active role; however, I’m not sure about our role in Pre-Deployment Tests.

Hi Mert, for pre-deployment tests, Quality Engineers implement component tests and, for some services, contract tests, but these can also be implemented by developers according to your team’s decisions. In some cases, we had shared responsibilities. If there are not many QEs in a team, these low-level tests can be implemented by developers.

I suggest that when new requirements come from the business, the QE should analyze and understand the needs and requirements and then create test scenarios. These scenarios should then be reviewed by the QE (a.k.a. QA), Product Owner, Dev, and, if possible, a business user, and categorized as unit, component, contract, SIT, E2E UI / Acceptance, etc.

Then, the automation workload can be shared between Devs and QEs. Unit, component, and contract tests can be implemented by Devs if there are not enough QEs available or the required skill set is lacking.

In our case, QE engineers are implementing component, SIT, and E2E tests. Unit and Integration tests are implemented by Devs.

For some cases, we got help from devs for Component and SIT tests implementation as well.

The good part is that each microservice has its own repository, and we write all tests except UI E2E tests inside the microservice repository in the same language, so devs and quality engineers can collaborate effectively.